在pre10g的很长时间内,Oracle仅提供exp/imp导入导出工具,虽然这2个实用程序十分有效(现在也是如此),但因为它们受限于client/server模式工具自身的限制,以普通用户程序的身份来运转数以TB计的数据,其才不堪大用!DataPump是10g以后主推的数据抽取/导入工具,不同于exp/imp工具,DataPump是一个服务器端的实用程序,因为运行在服务器上故而DataPump进程可以直接访问数据文件与SGA(无需借shadow进程之手),与exp/imp工具相比使用DataPump可以获得显著的性能改善。DataPump可以通过直接路径或外部表路径这两种方法导出数据;其中直接路径避开了数据库高速缓存。当使用直接路径模式抽取数据时,DataPump从磁盘直接读取数据文件,抽取和格式化文件内容,最后将内容写出到转储文件。该种模式和SGA交互等待少,其导入导出速度直接取决于数据库所在磁盘速度和cpu;因此,直接路径极为快速。

外部表路径模式将使用到数据库的高速缓存buffer cache,通过外部表路径方法导出数据时,DataPump使用普通的SELECT操作将数据块从数据文件中读入buffer cache,为了写出转储文件,数据会在缓存中被格式化。通过外部表路径导入数据时,DataPump根据转储文件的内容构造标准的插入语句,并且通过将数据块从数据文件读至缓存来执行这些语句,插入操作按照标准的样式在缓存中完成;如同任何普通DML操作一样,外部表路径也会同时产生撤销和重做。

DataPump自身会根据对象的复杂性作出使用直接路径还是外部表路径的选择;对于较复杂的对象(后文将列出)而言,为了分解复杂性而必须同SGA进行交互,此情况下Data Pump只能采用外部表模式。我们还可以通过使用access_method参数来控制其行为,当然这仅在我们确认Data Pump作出了错误选择时才有必要。

| 若满足右列条件EXPDP将采用direct_path即直接路径模式 | 表结构允许使用直接路径模式,举例而言:

表上没有启用针对SELECT操作的fine-grained access control 非队列表(queue table) 表上没有BFILE和opaque类型的列,或包含有opaque列的对象类型 表上没有加密列 表上没有被废弃的旧类型列 若表上存在LONG或LONG RAW类型列,则此列只能是最后一列 需要导出的表或分区相对较少(多达250M),亦或者表或分区其实很大,但导出任务无法工作在并行模式(未指定parallel参数,或parallel参数设置为1) |

| 若满足右列条件EXPDP将采用external_table即外部表模式 | 数据结构不满足在直接路径模式下抽取的条件,举例而言:

表上启用了针对SELECT操作的精细粒度控制 队列表 表上包含了BFILE或opaque类型列,或者包含有opaque列的对象类型 表上存在加密列 表上存在被废弃的旧类型列 表上存在LONG或LONG RAW类型列,且不是最后列 数据结构满足使用直接路径模式的条件,但执行导出任务时相关表上指定了QUERY, SAMPLE, or REMAP_DATA等参数 数据结构满足使用直接路径模式的条件,但相关的表或分区相对较大(大于250M),此时并行SQL可以用来加速数据抽取 |

| 若满足右列条件IMPDP将采用direct_path即直接路径模式 | 数据结构满足使用直接路径模式的条件,举例而言:

当导入某单一表分区时该分区表上没有建立全局索引,这一点也包括分区的对象表 没有基于LOB列建立的域索引(domain index) 非cluster表 表上没有BFILE列或opaque类型列 表上没有嵌入了opaque类型的VARRAY列 表上没有加密列 没有启用补全日志(Supplemental logging)且表上没有LOB类型列 若导入表已预先建立了表建构,则需满足以下条件: 表上没有激活的触发器 并且 若是分区表则应有索引 并且 表上上没有启用针对INSERT操作的精细粒度控制 并且 表上除去check类型约束不存在其他类型约束 并且 表上没有unique的索引 执行导入任务时没有为相关表指定QUERY, REMAP_DATA等参数

需要导入的表或分区相对较小(少于250M),或者表或分区其实很大,但无法以并行模式导入(未指定parallel参数或指定其为1) |

| 若满足右列条件IMPDP将采用external_table即外部表路径 | 当数据结构不满足在直接路径模式下导入的条件,举例而言:

当导入某单一表分区时该分区表上建有全局索引,这一点也包括分区的对象表 表上有基于LOB列建立的域索引(domain index) cluster表 表上有BFILE列或opaque类型列 表上有嵌入了opaque类型的VARRAY列 表上有加密列 启用了补全日志且表上有至少一个LOB列 若导入表已预先建立了表建构,且满足以下条件: 表上有激活的触发器 或者 是分区表且没有任何索引 或者 表上启用了针对INSERT操作的精细粒度控制 或者 表上除去check类型约束还还有其他类型约束 或者 表上有unique的索引 执行导入任务时有为相关表指定QUERY, REMAP_DATA等参数

数据结构满足使用直接路径模式的条件,但相关的表或分区相对较大(大于250M),此时并行SQL可以用来加速数据导入 |

我们有必要深入了解一下外部表路径究竟是如何工作的:

SQL> select * from v$version;

BANNER

----------------------------------------------------------------

Oracle Database 10g Enterprise Edition Release 10.2.0.4.0 - 64bi

PL/SQL Release 10.2.0.4.0 - Production

CORE 10.2.0.4.0 Production

TNS for Linux: Version 10.2.0.4.0 - Production

NLSRTL Version 10.2.0.4.0 - Production

SQL> exec dbms_workload_repository.create_snapshot;

PL/SQL procedure successfully completed.

/* 执行expdp导出任务,TCACHE表大小为465M,这里显示指定了外部表路径 */

[maclean@rh2 ~]$ expdp maclean/maclean directory=dumps tables=TCACHE dumpfile=tcache1.dmp access_method=external_table

Export: Release 10.2.0.4.0 - 64bit Production on Tuesday, 24 August, 2010 20:31:48

Copyright (c) 2003, 2007, Oracle. All rights reserved.

Connected to: Oracle Database 10g Enterprise Edition Release 10.2.0.4.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

Starting "MACLEAN"."SYS_EXPORT_TABLE_07": maclean/******** directory=dumps tables=TCACHE dumpfile=tcache1.dmp access_method=external_table

Estimate in progress using BLOCKS method...

Processing object type TABLE_EXPORT/TABLE/TABLE_DATA

Total estimation using BLOCKS method: 544 MB

Processing object type TABLE_EXPORT/TABLE/TABLE

Processing object type TABLE_EXPORT/TABLE/STATISTICS/TABLE_STATISTICS

. . exported "MACLEAN"."TCACHE" 465.6 MB 4999999 rows

Master table "MACLEAN"."SYS_EXPORT_TABLE_07" successfully loaded/unloaded

******************************************************************************

Dump file set for MACLEAN.SYS_EXPORT_TABLE_07 is:

/s01/dump/tcache1.dmp

Job "MACLEAN"."SYS_EXPORT_TABLE_07" successfully completed at 20:32:18

/* 这次我们指定了并行度为4 */

[maclean@rh2 ~]$ expdp maclean/maclean directory=dumps tables=TCACHE dumpfile=tcache2.dmp,tcache3.dmp,tcache4.dmp,tcache5.dmp access_method=external_table parallel=4

Export: Release 10.2.0.4.0 - 64bit Production on Tuesday, 24 August, 2010 20:32:49

Copyright (c) 2003, 2007, Oracle. All rights reserved.

Connected to: Oracle Database 10g Enterprise Edition Release 10.2.0.4.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

Starting "MACLEAN"."SYS_EXPORT_TABLE_07": maclean/******** directory=dumps tables=TCACHE dumpfile=tcache2.dmp,tcache3.dmp,tcache4.dmp,tcache5.dmp access_method=external_table parallel=4

Estimate in progress using BLOCKS method...

Processing object type TABLE_EXPORT/TABLE/TABLE_DATA

Total estimation using BLOCKS method: 544 MB

Processing object type TABLE_EXPORT/TABLE/TABLE

Processing object type TABLE_EXPORT/TABLE/STATISTICS/TABLE_STATISTICS

. . exported "MACLEAN"."TCACHE" 465.6 MB 4999999 rows

Master table "MACLEAN"."SYS_EXPORT_TABLE_07" successfully loaded/unloaded

******************************************************************************

Dump file set for MACLEAN.SYS_EXPORT_TABLE_07 is:

/s01/dump/tcache2.dmp

/s01/dump/tcache3.dmp

/s01/dump/tcache4.dmp

Job "MACLEAN"."SYS_EXPORT_TABLE_07" successfully completed at 20:33:04

SQL> exec dbms_workload_repository.create_snapshot;

PL/SQL procedure successfully completed.

SQL> @?/rdbms/admin/awrrpt

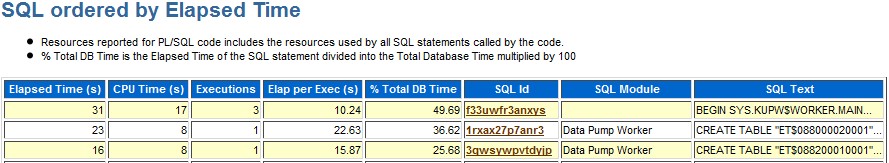

接着我们分析该时段内的awr报告!

从AWR报告中,我们不难找到以下语句:

SQL ID 3qwsywpvtdyjp:

CREATE TABLE "ET$088200010001" ( "OWNER", "OBJECT_NAME", "SUBOBJECT_NAME", "OBJECT_ID", "DATA_OBJECT_ID", "OBJECT_TYPE", "CREATED", "LAST_DDL_TIME", "TIMESTAMP", "STATUS", "TEMPORARY", "GENERATED", "SECONDARY" ) ORGANIZATION EXTERNAL ( TYPE ORACLE_DATAPUMP DEFAULT DIRECTORY DUMPS ACCESS PARAMETERS (DEBUG =0 DATAPUMP INTERNAL TABLE "MACLEAN"."TCACHE" JOB ( "MACLEAN", "SYS_EXPORT_TABLE_07", 1) WORKERID 1 PARALLEL 1 VERSION COMPATIBLE ENCRYPTPASSWORDISNULL ) LOCATION ('bogus.dat') ) PARALLEL 1 REJECT LIMIT UNLIMITED AS SELECT /*+ PARALLEL(KU$, 1) */ "OWNER", "OBJECT_NAME", "SUBOBJECT_NAME", "OBJECT_ID", "DATA_OBJECT_ID", "OBJECT_TYPE", "CREATED", "LAST_DDL_TIME", "TIMESTAMP", "STATUS", "TEMPORARY", "GENERATED", "SECONDARY" FROM RELATIONAL("MACLEAN"."TCACHE" ) KU$

SQL ID 1rxax27p7anr3:

CREATE TABLE "ET$088000020001" ( "OWNER", "OBJECT_NAME", "SUBOBJECT_NAME", "OBJECT_ID", "DATA_OBJECT_ID", "OBJECT_TYPE", "CREATED", "LAST_DDL_TIME", "TIMESTAMP", "STATUS", "TEMPORARY", "GENERATED", "SECONDARY" ) ORGANIZATION EXTERNAL ( TYPE ORACLE_DATAPUMP DEFAULT DIRECTORY DUMPS ACCESS PARAMETERS (DEBUG =0 DATAPUMP INTERNAL TABLE "MACLEAN"."TCACHE" JOB ( "MACLEAN", "SYS_EXPORT_TABLE_07", 1) WORKERID 2 PARALLEL 2 VERSION COMPATIBLE ENCRYPTPASSWORDISNULL ) LOCATION ('bogus.dat') ) PARALLEL 2 REJECT LIMIT UNLIMITED AS SELECT /*+ PARALLEL(KU$, 2) */ "OWNER", "OBJECT_NAME", "SUBOBJECT_NAME", "OBJECT_ID", "DATA_OBJECT_ID", "OBJECT_TYPE", "CREATED", "LAST_DDL_TIME", "TIMESTAMP", "STATUS", "TEMPORARY", "GENERATED", "SECONDARY" FROM RELATIONAL("MACLEAN"."TCACHE" ) KU$

以上就是Expdp在导出数据时使用的创建组织类型为ORACLE_DATAPUMP的外部表的SQL语句;后者语句中指定的并行度为2,而非我所指定的4,因该是Oracle考虑到所需导出表的具体大小,设置过高的并行度反而会适得其反,所以对设定值做了修正。

启用了并行的SQL 1rxax27p7anr3执行时间为22.63s,反而要比不使用并行慢;可见在TCACHE表这个数量级,完全没有必要使用并行导出。

接着我们来探究一下外部表路径的导入:

SQL> truncate table tcache;

Table truncated.

SQL> set pagesize 1400;

SQL> set linesize 140;

/* 执行外部表路径的数据导入,并行度1 */

[maclean@rh2 admin]$ impdp maclean/maclean directory=dumps tables=TCACHE content=data_only dumpfile=tcache1.dmp access_method=external_table

/* 在以上SQL执行期间查询V$SQL动态视图的相关语句*/

select sql_text,

sql_id,

cpu_time,

elapsed_time,

disk_reads,

buffer_gets,

rows_processed

from v$sql

where sql_text like '%TCACHE%'

and sql_text not like '%like%';

SQL_TEXT

--------------------------------------------------------------------------------------------------------------------------------------------

SQL_ID CPU_TIME ELAPSED_TIME DISK_READS BUFFER_GETS ROWS_PROCESSED

------------- ---------- ------------ ---------- ----------- --------------

INSERT /*+ SYS_DL_CURSOR */ INTO RELATIONAL("MACLEAN"."TCACHE") ("OWNER","OBJECT_NAME","SUBOBJECT_NAME","OBJECT_ID","DATA_OBJECT_ID","OBJECT

_TYPE","CREATED","LAST_DDL_TIME","TIMESTAMP","STATUS","TEMPORARY","GENERATED","SECONDARY") VALUES (NULL,NULL,NULL,NULL,NULL,NULL,NULL,NULL,N

ULL,NULL,NULL,NULL,NULL)

6tn47a220d34q 408 408 0 0 0

INSERT /*+APPEND+*/ /*+PARALLEL("TCACHE",1)+*/ INTO RELATIONAL("MACLEAN"."TCACHE" ) ("OWNER", "OBJECT_NAME", "SUBOBJECT_NAME", "OBJECT_ID",

"DATA_OBJECT_ID", "OBJECT_TYPE", "CREATED", "LAST_DDL_TIME", "TIMESTAMP", "STATUS", "TEMPORARY", "GENERATED", "SECONDARY") SELECT "OWNE

R", "OBJECT_NAME", "SUBOBJECT_NAME", "OBJECT_ID", "DATA_OBJECT_ID", "OBJECT_TYPE", "CREATED", "LAST_DDL_TIME", "TIMESTAMP", "STATUS", "TEMPO

RARY", "GENERATED", "SECONDARY" FROM "ET$087B00060001" KU$

66q4w8c4ak0wf 31576 31576 0 608 0

/* 当导入job完成后再次查询 */

select sql_text,

sql_id,

cpu_time,

elapsed_time,

disk_reads,

buffer_gets,

rows_processed

from v$sql

where sql_text like '%TCACHE%'

and sql_text not like '%like%';

SQL_TEXT

--------------------------------------------------------------------------------------------------------------------------------------------

SQL_ID CPU_TIME ELAPSED_TIME DISK_READS BUFFER_GETS ROWS_PROCESSED

------------- ---------- ------------ ---------- ----------- --------------

INSERT /*+ SYS_DL_CURSOR */ INTO RELATIONAL("MACLEAN"."TCACHE") ("OWNER","OBJECT_NAME","SUBOBJECT_NAME","OBJECT_ID","DATA_OBJECT_ID","OBJECT

_TYPE","CREATED","LAST_DDL_TIME","TIMESTAMP","STATUS","TEMPORARY","GENERATED","SECONDARY") VALUES (NULL,NULL,NULL,NULL,NULL,NULL,NULL,NULL,N

ULL,NULL,NULL,NULL,NULL)

6tn47a220d34q 408 408 0 0 0

/* 执行完成后一句SQL立马消失了!? Oracle好像很不情愿我们看到外部表路径使用的INSERT语句 :) */

INSERT /*+APPEND+*/ /*+PARALLEL("TCACHE",1)+*/

INTO RELATIONAL

("MACLEAN"."TCACHE")

("OWNER",

"OBJECT_NAME",

"SUBOBJECT_NAME",

"OBJECT_ID",

"DATA_OBJECT_ID",

"OBJECT_TYPE",

"CREATED",

"LAST_DDL_TIME",

"TIMESTAMP",

"STATUS",

"TEMPORARY",

"GENERATED",

"SECONDARY")

SELECT "OWNER",

"OBJECT_NAME",

"SUBOBJECT_NAME",

"OBJECT_ID",

"DATA_OBJECT_ID",

"OBJECT_TYPE",

"CREATED",

"LAST_DDL_TIME",

"TIMESTAMP",

"STATUS",

"TEMPORARY",

"GENERATED",

"SECONDARY"

FROM "ET$087F00230001" KU$

/* 这里的KU$代表的就是以ORACLE_DATAPUMP转储文件形式存放在数据库外的外部表 */

/* 可以看到这里尝试使用了APPEND插入模式,以减少undo和redo的产生*/

SQL> truncate table tcache;

Table truncated.

/* 若启用并行导入呢? */

[maclean@rh2 admin]$ impdp maclean/maclean directory=dumps tables=TCACHE content=data_only dumpfile=tcache2.dmp,tcache3.dmp,tcache4.dmp access_method=external_table parallel=3

........

select sql_text,

sql_id,

cpu_time,

elapsed_time,

disk_reads,

buffer_gets,

rows_processed

from v$sql

where sql_text like '%TCACHE%'

and sql_text not like '%like%';

SQL_TEXT

--------------------------------------------------------------------------------------------------------------------------------------------

SQL_ID CPU_TIME ELAPSED_TIME DISK_READS BUFFER_GETS ROWS_PROCESSED

------------- ---------- ------------ ---------- ----------- --------------

INSERT /*+APPEND+*/ /*+PARALLEL("TCACHE",1)+*/ INTO RELATIONAL("MACLEAN"."TCACHE" ) ("OWNER", "OBJECT_NAME", "SUBOBJECT_NAME", "OBJECT_ID",

"DATA_OBJECT_ID", "OBJECT_TYPE", "CREATED", "LAST_DDL_TIME", "TIMESTAMP", "STATUS", "TEMPORARY", "GENERATED", "SECONDARY") SELECT "OWNE

R", "OBJECT_NAME", "SUBOBJECT_NAME", "OBJECT_ID", "DATA_OBJECT_ID", "OBJECT_TYPE", "CREATED", "LAST_DDL_TIME", "TIMESTAMP", "STATUS", "TEMPO

RARY", "GENERATED", "SECONDARY" FROM "ET$087A00040001" KU$

2mvs15623ssvy 5910289 5910289 0 73578 0

INSERT /*+ SYS_DL_CURSOR */ INTO RELATIONAL("MACLEAN"."TCACHE") ("OWNER","OBJECT_NAME","SUBOBJECT_NAME","OBJECT_ID","DATA_OBJECT_ID","OBJECT

_TYPE","CREATED","LAST_DDL_TIME","TIMESTAMP","STATUS","TEMPORARY","GENERATED","SECONDARY") VALUES (NULL,NULL,NULL,NULL,NULL,NULL,NULL,NULL,N

ULL,NULL,NULL,NULL,NULL)

6tn47a220d34q 1167 1167 0 0 0

/* 似乎因为沿用了APPEND插入模式,插入语句的并行度并未上升 */

/* 因为Oracle不想同我们分享它的小秘密,所以awr和ash报告中都不记录外部表路径插入SQL的相关信息 */

/* 好吧,它有权保持沉默! */

That's great!

10046 level 12 trace:

INSERT /*+APPEND+*/ /*+PARALLEL(“TCACHE”,1)+*/ INTO RELATIONAL(“MACLEAN”.”TCACHE” ) (“OWNER”, “OBJECT_NAME”, “SUBOBJECT_NAME”, “OBJECT_ID”, “DATA_OBJECT_ID”, “OBJECT_TYPE”, “CREATED”, “LAST_DDL_TI

ME”, “TIMESTAMP”, “STATUS”, “TEMPORARY”, “GENERATED”, “SECONDARY”)

SELECT “OWNER”, “OBJECT_NAME”, “SUBOBJECT_NAME”, “OBJECT_ID”, “DATA_OBJECT_ID”, “OBJECT_TYPE”, “CREATED”, “LAST_DDL_TIME”, “TIMESTAMP”, “STATUS”, “TEMPORARY”, “GENERATED”, “SECONDARY”

FROM “ET$088400300001” KU$

call count cpu elapsed disk query current rows

——- —— ——– ———- ———- ———- ———- ———-

Parse 1 0.02 0.02 0 606 0 0

Execute 1 8.86 16.82 4 190 70755 4999999

Fetch 0 0.00 0.00 0 0 0 0

——- —— ——– ———- ———- ———- ———- ———-

total 2 8.89 16.84 4 796 70755 4999999

Misses in library cache during parse: 1

Optimizer mode: CHOOSE

Parsing user id: 64 (recursive depth: 2)

Rows Row Source Operation

——- —————————————————

1 LOAD AS SELECT (cr=973 pr=4 pw=68642 time=16956262 us)

4999999 EXTERNAL TABLE ACCESS FULL ET$088400300001 (cr=40 pr=0 pw=0 time=45824 us)

Elapsed times include waiting on following events:

Event waited on Times Max. Wait Total Waited

—————————————- Waited ———- ————

control file sequential read 4 0.00 0.00

Datapump dump file I/O 2329 0.00 0.25

db file sequential read 4 0.00 0.00

direct path write 2 0.00 0.00

Parallel Direct Load Insert DML

PURPOSE

——-

This document gives an overview of what is happening when a Direct-Load

Insert with Parallel DML (PDML) enabled is running against a non-partitioned

table.

SCOPE & APPLICATION

——————-

This article could be used by experienced database administrators and

data warehouse administrators for checking the behaviour of PDML statements.

Settings & Commands to run:

————————–

The following settings of extents (1Mb) have been selected in order to

make it easier to check the progress of the Insert statement.

init.ora -> sort_area_size=sort_area_retained_size=1048576 (1M)

Tablespace Temp file_id = 4 (from dba_data_files)

Tablespace Users_Data file_id = 9 (this is the tablespace of prod_table table)

SQL>alter tablespace Temp temporary;

SQL>alter tablespace Temp default storage (initial 1M next 1M pctincrease 0);

SQL> alter user test temporary tablespace Temp;

Login as user test from sqlplus.

SQL> create table test_pdml

SQL> tablespace users_data

SQL> storage (initial 1M next 1M pctincrease 0)

SQL> nologging

SQL> as select * from prod_table where 1=2;

SQL> alter table test_pdml parallel degree 4;

SQL> alter session enable parallel dml;

SQL> Insert /*+ Append parallel(test_pdml,4) */

SQL> into test_pdml

SQL> select from prod_table;

SQL> commit;

Check the Insert progress

—————————

The sqlplus session (sid=10) that issued the Insert

keeps a TX lock and a TM lock on the test_pdml table and also 4 PS locks

for each one of the Parallel Query servers.

Each one of the Parallel Query Server processes keeps a TX lock

and also a PS lock. We can join v$session and v$transaction and see

what open transactions exist.

The output of ‘select sid,type,id1,lmode,request from v$lock;’ will be as

follows:

SID TY ID1 LMODE REQUEST

———- — ———- ———- ———-

10 TX 65614 6 0

10 TM 30348 6 0

10 PS 1 4 0

10 PS 1 4 0

10 PS 1 4 0

10 PS 1 4 0

28 TX 327712 6 0

28 PS 1 4 0

32 TX 262171 6 0

32 PS 1 4 0

37 TX 458800 6 0

37 PS 1 4 0

38 TX 393236 6 0

38 PS 1 4 0

What is happening is the following:

a. First each parallel query server session uses SORT_AREA_SIZE memory.

Then TEMP segment in the TEMP tablespace is increasing if has not yet allocated

enough extents from previous sorts.

You can check its progress by:

SQL> select segment_name,segment_type,sum(bytes),count(*)

SQL> from dba_extents

SQL> where segment_type=’TEMPORARY’

SQL> group by segment_name,segment_type;

If the TEMPORARY segment in the TEMP tablespace has already allocated extents

from previous runs/sorts and you have not shutdown the database then you can

check its progress by querying views V$SORT_SEGMENT and V$SORT_USAGE

(see also Note:1039341.6)

SQL> select current_users,used_extents from v$sort_segment;

–> this will show how many users are using the TEMP segment in TEMP (temporary

tablespace) and how many extents are totally allocated)

SQL> select user,session_num,tablespace,extents from v$sort_usage;

–> this will show one row for each one the parallel query servers or other users

using sort segment

b. After TEMP segment usage reaches its maximum at USED_EXTENTS then 4 new

TEMPORARY segments (where 4 the number of Parallel Query Servers contributing

in the Parallel Insert) appear in the Users_Data Tablespace that use extents

allocation as defined in the test_pdml storage parameters.

You can check this by querying:

SQL> select segment_name,segment_type,sum(bytes),count(*)

SQL> from dba_extents

SQL> where segment_type=’TEMPORARY’

SQL> group by segment_name,segment_type;

–> now except for the default temp segment in the TEMP tablespace you will see

4 more temporary segments in the table tablespace.

c. When the statement finishes the table still has no new extents allocated,

checking the TEMPORARY segments, the default TEMP has returned USED_EXTENTS

back and there is only one TEMPORARY SEGMENT in the Users_Data tablespace that has

count(*) of extents the sum of all previous temporary segments and sum(bytes)

again their sum of extents

For example, run periodically the following selects:

SQL> select tablespace_name,segment_name,sum(bytes),count(*)

SQL> from dba_extents

SQL> where segment_type=’TEMPORARY’

SQL group by tablespace_name,segment_name;

/

SQL> select used_extents from v$sort_segment;

/

Sample outputs will be:

no rows selected

USED_EXTENTS

————

4

–> Temp segment in Temp tablespace starts to be used

TABLESPACE_NAME SEGMENT_NAME SUM(BYTES) COUNT(*)

————— ————— ———- ———-

TEMP 4.13578 11534336 11

USED_EXTENTS

————

20

–> temp segment in TEMP tablespace increases more and more

–> the difference between count(*) and used_extents is due to the fact that

–> the 2 selects are running at different times.

TABLESPACE_NAME SEGMENT_NAME SUM(BYTES) COUNT(*)

————— ————— ———- ———-

TEMP 4.13578 81788928 78

USED_EXTENTS

————

88

TABLESPACE_NAME SEGMENT_NAME SUM(BYTES) COUNT(*)

————— ————— ———- ———-

TEMP 4.13578 117440512 112

USED_EXTENTS

————

112

–> here temp segment in temp tablespace has reached its

–> maximum usage (it depends from the size of prod_table)

TABLESPACE_NAME SEGMENT_NAME SUM(BYTES) COUNT(*)

————— ————— ———- ———-

USERS_DATA 9.31238 6389760 6

USERS_DATA 9.32278 7454720 7

USERS_DATA 9.35658 6389760 6

USERS_DATA 9.36178 6389760 6

TEMP 4.13578 117440512 112

USED_EXTENTS

————

112

–> 4 new temp segments are created (one for each of the parallel query

–> servers that are used) but these segments appear in the users_data

–> tablespace in which the test_pdml table is created.

TABLESPACE_NAME SEGMENT_NAME SUM(BYTES) COUNT(*)

————— ————— ———- ———-

USERS_DATA 9.31238 13844480 13

USERS_DATA 9.32278 14909440 14

USERS_DATA 9.35658 14909440 14

USERS_DATA 9.36178 14909440 14

TEMP 4.13578 117440512 112

USED_EXTENTS

————

112

TABLESPACE_NAME SEGMENT_NAME SUM(BYTES) COUNT(*)

————— ————— ———- ———-

USERS_DATA 9.31238 19169280 18

USERS_DATA 9.32278 21299200 20

USERS_DATA 9.35658 21299200 20

USERS_DATA 9.36178 19169280 18

TEMP 4.13578 117440512 112

USED_EXTENTS

————

0

–> all data from the temp tablespace have been transferred to the

–> 4 temporary segments in the users_data tablespace and the temp

–> space in temp tablespace can be reused (used_extents=0)

TABLESPACE_NAME SEGMENT_NAME SUM(BYTES) COUNT(*)

————— ————— ———- ———-

USERS_DATA 9.35658 101064704 96

TEMP 4.13578 117440512 112

USED_EXTENTS

————

0

–> Insert statement has been finished so the 4 temp segments

–> were concatenated to just 1 temp segment in the users_data tablespace

–> no ‘commit’ has been issued at this time

TABLESPACE_NAME SEGMENT_NAME SUM(BYTES) COUNT(*)

————— ————— ———- ———-

TEMP 4.13578 117440512 112

USED_EXTENTS

————

0

–> this is just after the commit where the temp segment has disappeared

–> and all its extents have been added to the test_pdml table.

Check also v$session-wait:

Sid (10) of sqlplus client in the 1st phase changes between wait events :

– ‘PX deq credit: send blkd’

– ‘PX deq credit: need buffer’,

– ‘PX qref latch’,

– ‘db file scattered read’ from file_id#9 (v$session_wait.p1 = 9 )

(This is the users_data TS where prod_table exists).

Also the 4 parallel query servers (sids 28,32,27,38) change between :

— ‘PX deq: table q normal’

— ‘direct Path Write’ for file_id=4 (temp tablespace).

Then in the 2nd phase the sqlplus client changes to :

– ‘PX Deq: Execute Reply’

and the 4 parallel query servers to :

– ‘direct path read’ from file_id=4 (temp tablespace).

Issue the following query periodically:

SQL> select s.sid,s.status,s.program,w.event,w.p1,w.p2

SQL> from v$session s,v$transaction t,v$session_wait w

SQL> where s.taddr=t.addr and s.sid=w.sid;

Sample output at intervals will be:

SID STATUS PROGRAM EVENT P1 P2

— ——– ——————– ————————— ———- —–

32 ACTIVE ORACLE.EXE (P001) PX Deq: Table Q Normal 200 1

38 ACTIVE ORACLE.EXE (P000) PX Deq: Table Q Normal 200 1

28 ACTIVE ORACLE.EXE (P002) PX Deq: Table Q Normal 200 1

37 ACTIVE ORACLE.EXE (P003) PX Deq: Table Q Normal 200 1

10 ACTIVE SQLPLUSW.EXE db file scattered read 9 17034

SID STATUS PROGRAM EVENT P1 P2

— ——– ——————– ————————— ———- —–

32 ACTIVE ORACLE.EXE (P001) direct path write 4 7950

38 ACTIVE ORACLE.EXE (P000) direct path write 4 11342

28 ACTIVE ORACLE.EXE (P002) direct path write 4 3978

37 ACTIVE ORACLE.EXE (P003) direct path write 4 186

10 ACTIVE SQLPLUSW.EXE PX Deq Credit: need buffer 268500992 2

SID STATUS PROGRAM EVENT P1 P2

— ——– ——————– ————————— ———- —–

32 ACTIVE ORACLE.EXE (P001) PX Deq: Table Q Normal 200 1

38 ACTIVE ORACLE.EXE (P000) PX Deq: Table Q Normal 200 1

28 ACTIVE ORACLE.EXE (P002) PX Deq: Table Q Normal 200 1

37 ACTIVE ORACLE.EXE (P003) PX Deq: Table Q Normal 200 1

10 ACTIVE SQLPLUSW.EXE db file scattered read 9 18578

SID STATUS PROGRAM EVENT P1 P2

— ——– ——————– ————————— ———- —–

32 ACTIVE ORACLE.EXE (P001) direct path write 4 1664

38 ACTIVE ORACLE.EXE (P000) PX Deq: Table Q Normal 200 1

28 ACTIVE ORACLE.EXE (P002) PX Deq: Table Q Normal 200 2

37 ACTIVE ORACLE.EXE (P003) direct path write 4 3820

10 ACTIVE SQLPLUSW.EXE PX Deq Credit: send blkd 268500993 2

SID STATUS PROGRAM EVENT P1 P2

— ——– ——————– ————————— ———- —–

32 ACTIVE ORACLE.EXE (P001) direct path write 4 21230

38 ACTIVE ORACLE.EXE (P000) PX Deq: Table Q Normal 200 1

28 ACTIVE ORACLE.EXE (P002) PX Deq: Table Q Normal 200 2

37 ACTIVE ORACLE.EXE (P003) direct path write 4 7510

10 ACTIVE SQLPLUSW.EXE PX Deq Credit: send blkd 268500993 2

SID STATUS PROGRAM EVENT P1 P2

— ——– ——————– ————————— ———- —–

32 ACTIVE ORACLE.EXE (P001) PX Deq: Table Q Normal 200 2

38 ACTIVE ORACLE.EXE (P000) PX Deq: Table Q Normal 200 1

28 ACTIVE ORACLE.EXE (P002) PX Deq: Table Q Normal 200 1

37 ACTIVE ORACLE.EXE (P003) PX Deq: Table Q Normal 200 1

10 ACTIVE SQLPLUSW.EXE PX qref latch 1 1

SID STATUS PROGRAM EVENT P1 P2

— ——– ——————– ————————— ———- —–

32 ACTIVE ORACLE.EXE (P001) PX Deq: Table Q Normal 200 1

38 ACTIVE ORACLE.EXE (P000) PX Deq: Table Q Normal 200 2

28 ACTIVE ORACLE.EXE (P002) PX Deq: Table Q Normal 200 1

37 ACTIVE ORACLE.EXE (P003) PX Deq: Table Q Normal 200 1

10 ACTIVE SQLPLUSW.EXE db file scattered read 9 15596

SID STATUS PROGRAM EVENT P1 P2

— ——– ——————– ————————— ———- —–

32 ACTIVE ORACLE.EXE (P001) direct path read 4 7962

38 ACTIVE ORACLE.EXE (P000) direct path read 4 11394

28 ACTIVE ORACLE.EXE (P002) direct path read 4 3983

37 ACTIVE ORACLE.EXE (P003) direct path read 4 197

10 ACTIVE SQLPLUSW.EXE PX Deq: Execute Reply 200 2

SID STATUS PROGRAM EVENT P1 P2

— ——– ——————– ————————— ———- —–

32 ACTIVE ORACLE.EXE (P001) direct path read 4 1477

38 ACTIVE ORACLE.EXE (P000) direct path read 4 60

28 ACTIVE ORACLE.EXE (P002) direct path read 4 9946

37 ACTIVE ORACLE.EXE (P003) direct path read 4 8327

10 ACTIVE SQLPLUSW.EXE PX Deq: Execute Reply 200 32

SID STATUS PROGRAM EVENT P1 P2

— ——– ——————– ————————— ———- —–

32 INACTIVE ORACLE.EXE (P001) PX Deq: Execution Msg 268566527 1

38 INACTIVE ORACLE.EXE (P000) PX Deq: Execution Msg 268566527 1

28 INACTIVE ORACLE.EXE (P002) PX Deq: Execution Msg 268566527 1

37 INACTIVE ORACLE.EXE (P003) PX Deq: Execution Msg 268566527 1

10 INACTIVE SQLPLUSW.EXE SQL*Net message from client 1413697536 1

no rows selected

Checking the history of extents on the table you now see that it has taken all

extents of the TEMPORARY segment and the last extent of each one

of each PQ server is trimmed.

Check with statement:

SQL> select extent_id,bytes from user_extents where segment_name=’TEST_PDML’

SQL> order by extent_id;

EXTENT_ID BYTES

———- ———-

0 1064960

1 1064960

2 1064960

3 1064960

4 1064960

5 1064960

…………………..

23 1064960

24 770048 <– last extent of PQ server 1 trimmed

25 1064960

26 1064960

27 1064960

……………………

46 1064960

47 1064960

48 770048 <– last extent from PQ server 2 trimmed

49 1064960

50 1064960

51 1064960

………………….

69 1064960

70 1064960

71 1064960

72 770048 <– last extent from PQ server 3 trimmed

73 1064960

74 1064960

……………………

94 1064960

95 1064960

96 778240 <– last extent from PQ server 4 trimmed

97 rows selected.