这2天在面试DBA Candidate的时候,我问到Oracle RAC中Brain Split脑裂决议的一些概念, 几乎所有的Candidate都告诉我当”只有2个节点的时候,投票算法就失效了,会让2个节点去抢占Quorum Disk,最先获得的节点将活下来” 。 我们姑且把这套理论叫做” 抢占论”。

“抢占论”的具体观点可能与下面这一段文字大同小异:

“在集群中,节点间通过某种机制(心跳)了解彼此的健康状态,以确保各节点协调工作。 假设只有”心跳”出现问题, 各个节点还在正常运行, 这时,每个节点都认为其他的节点宕机了, 自己是整个集群环境中的”唯一建在者”,自己应该获得整个集群的”控制权”。 在集群环境中,存储设备都是共享的, 这就意味着数据灾难, 这种情况就是”脑裂”

解决这个问题的通常办法是使用投票算法(Quorum Algorithm). 它的算法机理如下:观点1:

集群中各个节点需要心跳机制来通报彼此的”健康状态”,假设每收到一个节点的”通报”代表一票。对于三个节点的集群,正常运行时,每个节点都会有3票。 当结点A心跳出现故障但节点A还在运行,这时整个集群就会分裂成2个小的partition。 节点A是一个,剩下的2个是一个。 这是必须剔除一个partition才能保障集群的健康运行。 对于有3个节点的集群, A 心跳出现问题后, B 和 C 是一个partion,有2票, A只有1票。 按照投票算法, B 和C 组成的集群获得控制权, A 被剔除。

观点2:

如果只有2个节点,投票算法就失效了。 因为每个节点上都只有1票。 这时就需要引入第三个设备:Quorum Device. Quorum Device 通常采用饿是共享磁盘,这个磁盘也叫作Quorum disk。 这个Quorum Disk 也代表一票。 当2个结点的心跳出现问题时, 2个节点同时去争取Quorum Disk 这一票, 最早到达的请求被最先满足。 故最先获得Quorum Disk的节点就获得2票。另一个节点就会被剔除。“

以上这段文字描述中观点1 与我在<Oracle RAC Brain Split Resolution> 一文中提出的看法其实是类似的。 这里再列出我的描述:

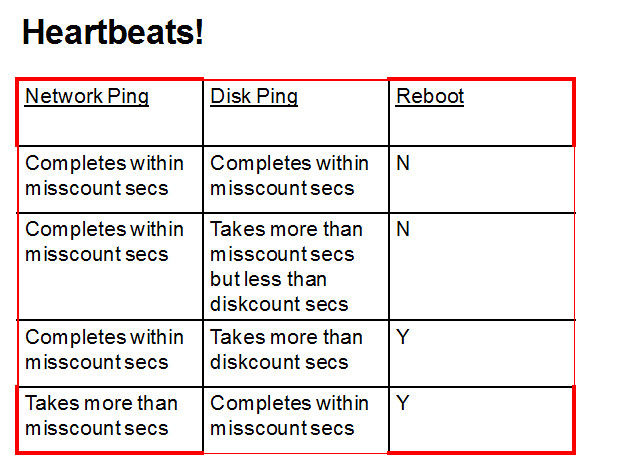

在脑裂检查阶段Reconfig Manager会找出那些没有Network Heartbeat而有Disk Heartbeat的节点,并通过Network Heartbeat(如果可能的话)和Disk Heartbeat的信息来计算所有竞争子集群(subcluster)内的节点数目,并依据以下2种因素决定哪个子集群应当存活下去:

- 拥有最多节点数目的子集群(Sub-cluster with largest number of Nodes)

- 若子集群内数目相等则为拥有最低节点号的子集群(Sub-cluster with lowest node number),举例来说在一个2节点的RAC环境中总是1号节点会获胜。

补充:关于 我引入的子集群的概念的介绍:

“在解决脑裂的场景中,NM还会监控voting disk以了解其他的竞争子集群(subclusters)。关于子集群我们有必要介绍一下,试想我们的环境中存在大量的节点,以Oracle官方构建过的128个节点的环境为我们的想象空间,当网络故障发生时存在多种的可能性,一种可能性是全局的网络失败,即128个节点中每个节点都不能互相发生网络心跳,此时会产生多达128个的信息”孤岛”子集群。另一种可能性是局部的网络失败,128个节点中被分成多个部分,每个部分中包含多于一个的节点,这些部分就可以被称作子集群(subclusters)。当出现网络故障时子集群内部的多个节点仍能互相通信传输投票信息(vote mesg),但子集群或者孤岛节点之间已经无法通过常规的Interconnect网络交流了,这个时候NM Reconfiguration就需要用到voting disk投票磁盘。”

争议主要体现在 , “抢占论” 认为当 只有2个节点时 是通过抢占votedisk 的结果来决定具体哪个节点存活下来,同时” 抢占论”没有介绍 当存在多个相同节点数目的子集群情况下的结论(譬如4节点的RAC , 1、2节点组成一个子集群,3、4节点组成一个子集群), 若按照2节点时的做法那么依然是通过子集群间抢占votedisk来决定。

我个人认为这种说法(“抢占论”)是错误的,不管是具体脑裂时的CRS关键进程css的日志,还是Oracle官方的内部文档都可以说明该问题。

我们来看10.2 RAC中的一个场景,假设集群中共有3个节点,其中1号实例没有被启动,集群中只有2个活动节点(active node),发生2号节点的网络失败的故障,因2号节点的member number较小故其通过voting disk向3号节点发起驱逐,具体日志如下:

观察红色部分的日志 ,明确显示了NM(Node Monitor)节点监控服务检查votedisk信息,并计算出了smaller cluster size 以下为2号节点的ocssd.log日志 [ CSSD]2011-04-23 17:42:32.022 [3032460176] > TRACE: clssnmCheckDskInfo: node 3, vrh3, state 5 with leader 3 has smaller cluster size 1; my cluster size 1 with leader 2 检查voting disk后发现子集群3为最小"子集群"(3号节点的node number较2号大);2号节点为最大子集群 [ CSSD]2011-04-23 17:42:32.022 [3032460176] >TRACE: clssnmEvict: Start [ CSSD]2011-04-23 17:42:32.022 [3032460176] >TRACE: clssnmEvict: Evicting node 3, vrh3, birth 3, death 13, impendingrcfg 1, stateflags 0x40d [ CSSD]2011-04-23 17:42:32.022 [3032460176] >TRACE: clssnmSendShutdown: req to node 3, kill time 1643084 发起对3号节点的驱逐和shutdown request 以下为3号节点的ocssd.log日志: [ CSSD]2011-04-23 17:43:15.913 [3032460176] >ERROR: clssnmCheckDskInfo: Aborting local node to avoid splitbrain. [ CSSD]2011-04-23 17:43:15.913 [3032460176] >ERROR: : my node(3), Leader(3), Size(1) VS Node(2), Leader(2), Size(1) 读取voting disk后发现kill block,为避免split brain,自我aborting!

此外Metalink 上一些官方Note 也明确说明了我以上的观点 , 摘录部分内容如下:

| 1. When interconnect breaks – keeps the largest cluster possible up, other nodes will be evicted, in 2 node cluster lowest number node remains. 2. |

实际上有部分Vendor Unix Clusterware集群软件的脑裂可能如确实是以谁先获得 “Quorum disk”为决定因素, 但是自10g 推出的Oracle 自己的Real Application Cluster(RAC) 的clusterware 或者说 CRS( cluster ready services) 在Brain Split Resolution时并非如此,在这方面类推并不能帮助我们找出正确的结论。