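DRM (Dynamic Resource Management) is a dynamic resource management operation unique to Oracle RAC. We have all heard plenty of theory about DRM, but have you ever watched it happen with your own eyes? Let's take a look today: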

    SQL> select * from v$version;

    BANNER
    --------------------------------------------------------------------------------
    Oracle Database 11g Enterprise Edition Release 11.2.0.3.0 - 64bit Production
    PL/SQL Release 11.2.0.3.0 - Production
    CORE 11.2.0.3.0 Production
    TNS for Linux: Version 11.2.0.3.0 - Production
    NLSRTL Version 11.2.0.3.0 - Production

    SQL> select count(*) from gv$instance;

      COUNT(*)
    ----------
             2

    SQL> create table drm_mac(t1 int) tablespace users;

    Table created.

    SQL> insert into drm_mac values(1);

    1 row created.

    SQL> commit;

    Commit complete.

    SQL> alter system flush buffer_cache;

    System altered.

    SQL> select * from drm_mac;

            T1
    ----------
             1

    SQL> select object_id,data_object_id from dba_objects where object_name='DRM_MAC';

     OBJECT_ID DATA_OBJECT_ID
    ---------- --------------
         64046          64046

    --FIRST FIND THE OBJECT IDS
    With OBJ_IDS As
     (Select DATA_Object_Id OBJECT_ID
        From Dba_Objects
       Where Object_Name = 'DRM_MAC'), --Customize: replace the object name here
    Addr As
     (Select /*+ materialize */ Le_Addr, class, state
        From X$Bh, OBJ_IDS
       Where Object_Id = Obj),
    --NOW GET THE RESOURCE NAME IN HEX
    Hexnames As
     (Select Rtrim(B.Kjblname, ' '||chr(0)) Hexname
        From X$Le A, X$Kjbl B, Addr
       Where A.Le_Kjbl=B.Kjbllockp
         and class = 1 and state <> 3
         And A.Le_Addr = Addr.Le_Addr)
    -- NOW FIND THE NODE MASTERS
    Select A.Master_Node Mast, Count(*)
      From Gv$Dlm_Ress A, Hexnames H
     Where Rtrim(A.Resource_Name, ' '||chr(0)) = H.Hexname
     Group by A.Master_Node;

          MAST   COUNT(*)
    ---------- ----------
             0          5

==> This shows that DRM_MAC is mastered on node 0, with 5 blocks in total.

Log in to node 2 and enable DRM tracing on that node's LMS process:

    oradebug setospid 29295;
    oradebug event trace[RAC_DRM] disk highest;

Then use lkdebug -m pkey data_object_id to manually trigger a remaster of DRM_MAC. Note that even a manually triggered DRM only places the object on the DRM queue (DRM ENQUEUE):

    *** 2012-11-27 09:27:20.728
    Processing Oradebug command 'lkdebug -m pkey 64045'
    *****************************************************************
    GLOBAL ENQUEUE SERVICE DEBUG INFORMATION
    ----------------------------------------
    Queued DRM request for pkey 64045.0, tobe master 1, flags x1
    ***************** End of lkdebug output *************************
    Processing Oradebug command 'lkdebug -m pkey 64045'
    *****************************************************************
    GLOBAL ENQUEUE SERVICE DEBUG INFORMATION
    ----------------------------------------
    kjdrrmq: pkey 64045.0 is already on the affinity request queue, old tobe == new tobe 1
    ***************** End of lkdebug output *************************

    SQL> oradebug setmypid
    Statement processed.
    SQL> oradebug lkdebug -m pkey 64046;

    --FIRST FIND THE OBJECT IDS
    With OBJ_IDS As
     (Select DATA_Object_Id OBJECT_ID
        From Dba_Objects
       Where Object_Name = 'DRM_MAC'), --Customize
    Addr As
     (Select /*+ materialize */ Le_Addr, class, state
        From X$Bh, OBJ_IDS
       Where Object_Id = Obj),
    --NOW GET THE RESOURCE NAME IN HEX
    Hexnames As
     (Select Rtrim(B.Kjblname, ' '||chr(0)) Hexname
        From X$Le A, X$Kjbl B, Addr
       Where A.Le_Kjbl=B.Kjbllockp
         and class = 1 and state <> 3
         And A.Le_Addr = Addr.Le_Addr)
    -- NOW FIND THE NODE MASTERS
    Select A.Master_Node Mast, Count(*)
      From Gv$Dlm_Ress A, Hexnames H
     Where Rtrim(A.Resource_Name, ' '||chr(0)) = H.Hexname
     Group by A.Master_Node;

          MAST   COUNT(*)
    ---------- ----------
             1          5

With oradebug lkdebug -m pkey, DRM_MAC has been remastered to node 2 (reported as MAST 1 above, since the master node numbering in this output appears to be zero-based).

    SQL> select * from v$gcspfmaster_info where DATA_OBJECT_ID=64046;

       FILE_ID DATA_OBJECT_ID GC_MASTERIN CURRENT_MASTER PREVIOUS_MASTER REMASTER_CNT
    ---------- -------------- ----------- -------------- --------------- ------------
             0          64046 Affinity                 1               0            1

    SQL> select * from gv$policy_history where DATA_OBJECT_ID=64046 order by EVENT_DATE;

    no rows selected

    SQL> select * from x$object_policy_statistics;

    no rows selected

    *** 2013-11-15 04:08:35.191
    2013-11-15 04:08:35.191065 : kjbldrmnextpkey: AFFINITY pkey 64046.0
     pkey 64046.0 undo 0 stat 5 masters[1, 1->2] reminc 13 RM# 18
     flg x7 type x0 afftime x8b0628ee, acquire time 0
     nreplays by lms 0 = 0
     benefit 0, total 0, remote 0, cr benefit 0, cr total 0, cr remote 0
    kjblpkeydrmqscchk: Begin for pkey 64046/0 bkt[0->8191] read-mostly 0
    kjblpkeydrmqscchk: End for pkey 64046.0
    2013-11-15 04:08:35.191312 : DRM(20) quiesced basts [0-8191]
    2013-11-15 04:08:35.197815 :
    * lms acks DRMFRZ (8191)
    * lms 0 finished parallel drm freeze in DRM(20) window 1, pcount 47
    DRM(20) win(1) lms 0 finished drm freeze
    2013-11-15 04:08:35.200436 :
    * kjbrrcres: In this window: 278 GCS resources, 0 remastered
    * In all windows: 278 GCS resources, 0 remastered
    2013-11-15 04:08:35.201606 : kjbldrmnextpkey: AFFINITY pkey 64046.0
     pkey 64046.0 undo 0 stat 5 masters[1, 1->2] reminc 13 RM# 18
     flg x7 type x0 afftime x8b0628ee, acquire time 0
     nreplays by lms 0 = 0
     benefit 0, total 0, remote 0, cr benefit 0, cr total 0, cr remote 0
    kjbldrmrpst: no more locks for pkey 64046.0, #lk 0, #rm 0, #replay 0, #dup 0 objscan 4
    DRM(20) Completed replay (highb 8191)
    DRM(20) win(1) lms 0 finished replaying gcs resources
    kjbmprlst: DRMFRZ replay msg from 1 (clockp 0x92f96c18, 3)
     0x1fe6a.5, 64046.0, x0, lockseq 0x13c5, reqid 0x3
     state 0x100, 1 -> 0, role 0x0, wrt 0x0.0
    GCS RESOURCE 0xb28963a0 hashq [0xbb4cdd38,0xbb4cdd38] name[0x1fe6a.5] pkey 64046.0
     grant 0xb19a8370 cvt (nil) send (nil)@0,0 write (nil),0@65536
     flag 0x0 mdrole 0x1 mode 1 scan 0.0 role LOCAL
     disk: 0x0000.00000000 write: 0x0000.00000000 cnt 0x0 hist 0x0
     xid 0x0000.000.00000000 sid 0 pkwait 0s rmacks 0
     refpcnt 0 weak: 0x0000.00000000
     pkey 64046.0 undo 0 stat 5 masters[1, 1->2] reminc 13 RM# 18
     flg x7 type x0 afftime x8b0628ee, acquire time 0
     nreplays by lms 0 = 0
     benefit 0, total 0, remote 0, cr benefit 0, cr total 0, cr remote 0
     hv 3 [stat 0x0, 1->1, wm 32768, RMno 0, reminc 3, dom 0]
     kjga st 0x4, step 0.36.0, cinc 15, rmno 19, flags 0x20
     lb 0, hb 8191, myb 8141, drmb 8141, apifrz 1
     GCS SHADOW 0xb19a8370,4 resp[0xb28963a0,0x1fe6a.5] pkey 64046.0
     grant 1 cvt 0 mdrole 0x1 st 0x100 lst 0x40 GRANTQ rl LOCAL
     master 2 owner 1 sid 0 remote[0x92f96c18,3] hist 0x10c1430c0218619
     history 0x19.0xc.0x6.0x1.0xc.0x6.0x5.0x6.0x1.0x0.
     cflag 0x0 sender 0 flags 0x0 replay# 0 abast (nil).x0.1 dbmap (nil)
     disk: 0x0000.00000000 write request: 0x0000.00000000
     pi scn: 0x0000.00000000 sq[0xb28963d0,0xb28963d0]
     msgseq 0x1 updseq 0x0 reqids[3,0,0] infop (nil) lockseq x13c5
     GCS SHADOW END
    GCS RESOURCE END
    2013-11-15 04:08:35.204351 : 0 write requests issued in 72 GCS resources
     0 PIs marked suspect, 0 flush PI msgs
    2013-11-15 04:08:35.204493 : 0 write requests issued in 99 GCS resources
     0 PIs marked suspect, 0 flush PI msgs
    2013-11-15 04:08:35.204648 : 0 write requests issued in 54 GCS resources
     0 PIs marked suspect, 0 flush PI msgs
    2013-11-15 04:08:35.204790 : 0 write requests issued in 35 GCS resources
     0 PIs marked suspect, 0 flush PI msgs
    2013-11-15 04:08:35.204948 : 0 write requests issued in 18 GCS resources
     0 PIs marked suspect, 0 flush PI msgs
    2013-11-15 04:08:35.205048 : 0 write requests issued in 0 GCS resources
     0 PIs marked suspect, 0 flush PI msgs
    2013-11-15 04:08:35.205327 : 0 write requests issued in 0 GCS resources
     0 PIs marked suspect, 0 flush PI msgs
    2013-11-15 04:08:35.205590 : 0 write requests issued in 0 GCS resources
     0 PIs marked suspect, 0 flush PI msgs
    * kjbrrcfwst: resp x0xb28963a0, id1 x1fe6a, drm
    2013-11-15 04:08:35.205799 : 0 write requests issued in 1 GCS resources
     0 PIs marked suspect, 0 flush PI msgs
    DRM(20) win(1) lms 0 finished fixing gcs write protocol
    2013-11-15 04:08:35.207051 : kjbldrmnextpkey: AFFINITY pkey 64046.0
     pkey 64046.0 undo 0 stat 5 masters[1, 1->2] reminc 13 RM# 18
     flg x7 type x0 afftime x8b0628ee, acquire time 0
     nreplays by lms 0 = 0
     benefit 0, total 0, remote 0, cr benefit 0, cr total 0, cr remote 0
    kjblpkeydrmqscchk: Begin for pkey 64046/0 bkt[8192->16383] read-mostly 0
    kjblpkeydrmqscchk: End for pkey 64046.0
    2013-11-15 04:08:35.207595 : DRM(20) quiesced basts [8192-16383]
    2013-11-15 04:08:35.209146 :
    * lms acks DRMFRZ (16383)
    * lms 0 finished parallel drm freeze in DRM(20) window 2, pcount 47
    DRM(20) win(2) lms 0 finished drm freeze
    2013-11-15 04:08:35.211327 :
    * kjbrrcres: In this window: 256 GCS resources, 0 remastered
    * In all windows: 534 GCS resources, 0 remastered
    2013-11-15 04:08:35.214797 : kjbldrmnextpkey: AFFINITY pkey 64046.0
     pkey 64046.0 undo 0 stat 5 masters[1, 1->2] reminc 13 RM# 18
     flg x7 type x0 afftime x8b0628ee, acquire time 0
     nreplays by lms 0 = 0
     benefit 0, total 0, remote 0, cr benefit 0, cr total 0, cr remote 0
    kjbldrmrpst: no more locks for pkey 64046.0, #lk 0, #rm 0, #replay 0, #dup 0 objscan 4
    DRM(20) Completed replay (highb 16383)
    DRM(20) win(2) lms 0 finished replaying gcs resources
    kjbmprlst: DRMFRZ replay msg from 1 (clockp 0x92f96d28, 3)
     0x1fe6f.5, 64046.0, x0, lockseq 0x13ca, reqid 0x3
     state 0x100, 1 -> 0, role 0x0, wrt 0x0.0
    GCS RESOURCE 0xb2896520 hashq [0xbb4edd38,0xbb4edd38] name[0x1fe6f.5] pkey 64046.0
     grant 0xb19a3750 cvt (nil) send (nil)@0,0 write (nil),0@65536
     flag 0x0 mdrole 0x1 mode 1 scan 0.0 role LOCAL
     disk: 0x0000.00000000 write: 0x0000.00000000 cnt 0x0 hist 0x0
     xid 0x0000.000.00000000 sid 0 pkwait 0s rmacks 0
     refpcnt 0 weak: 0x0000.00000000
     pkey 64046.0 undo 0 stat 5 masters[1, 1->2] reminc 13 RM# 18
     flg x7 type x0 afftime x8b0628ee, acquire time 0
     nreplays by lms 0 = 0

    SQL> alter system set "_serial_direct_read"=never;

    System altered.
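The per-window lines in the LMS trace above can be tallied with a short script. The following is a minimal Python sketch, not part of the original test: the function name `summarize_drm_trace` and both regular expressions are illustrative assumptions based only on the trace text quoted here, and reading `masters[1, 1->2]` as an old-master to new-master transition is likewise an assumption.

```python
import re

# Matches e.g. "* kjbrrcres: In this window: 278 GCS resources, 0 remastered"
WINDOW_RE = re.compile(r"In this window: (\d+) GCS resources, (\d+) remastered")
# Matches e.g. "masters[1, 1->2]" (assumed here to mean old master -> new master)
MASTERS_RE = re.compile(r"masters\[(\d+), (\d+)->(\d+)\]")


def summarize_drm_trace(text):
    """Collect per-window resource counts and the distinct master transitions."""
    windows = [(int(res), int(rem)) for res, rem in WINDOW_RE.findall(text)]
    transitions = {(int(old), int(new)) for _, old, new in MASTERS_RE.findall(text)}
    return {
        "windows": windows,
        "total_resources": sum(res for res, _ in windows),
        "transitions": sorted(transitions),
    }


# A few lines copied from the trace shown in this article.
sample = """\
* kjbrrcres: In this window: 278 GCS resources, 0 remastered
* In all windows: 278 GCS resources, 0 remastered
 pkey 64046.0 undo 0 stat 5 masters[1, 1->2] reminc 13 RM# 18
* kjbrrcres: In this window: 256 GCS resources, 0 remastered
* In all windows: 534 GCS resources, 0 remastered
"""

summary = summarize_drm_trace(sample)
print(summary)
```

For the two windows quoted above, the per-window counts (278 and 256) add up to the 534 reported on the trace's own "In all windows" line, which is a quick sanity check that no window was missed when reading the file.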
    SQL> select count(*) from large_drms;

      COUNT(*)
    ----------
        999999

    SQL> select blocks from dba_tables where table_name='LARGE_DRMS';

        BLOCKS
    ----------
         13233

    SQL> select object_id,data_object_id from dba_objects where object_name='LARGE_DRMS';

     OBJECT_ID DATA_OBJECT_ID
    ---------- --------------
         64474          64474

    With OBJ_IDS As
     (Select DATA_Object_Id OBJECT_ID
        From Dba_Objects
       Where Object_Name = 'LARGE_DRMS'), --Customize
    Addr As
     (Select /*+ materialize */ Le_Addr, class, state
        From X$Bh, OBJ_IDS
       Where Object_Id = Obj),
    --NOW GET THE RESOURCE NAME IN HEX
    Hexnames As
     (Select Rtrim(B.Kjblname, ' '||chr(0)) Hexname
        From X$Le A, X$Kjbl B, Addr
       Where A.Le_Kjbl=B.Kjbllockp
         and class = 1 and state <> 3
         And A.Le_Addr = Addr.Le_Addr)
    -- NOW FIND THE NODE MASTERS
    Select A.Master_Node Mast, Count(*)
      From Gv$Dlm_Ress A, Hexnames H
     Where Rtrim(A.Resource_Name, ' '||chr(0)) = H.Hexname
     Group by A.Master_Node;

          MAST   COUNT(*)
    ---------- ----------
             1       6605
             0       6473

    oradebug setmypid
    oradebug lkdebug -m pkey 64474;

          MAST   COUNT(*)
    ---------- ----------
             1      13078
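The before and after master counts for LARGE_DRMS can be compared directly. Below is a small Python sketch under stated assumptions: the helper `remaster_delta` is hypothetical, and the dictionaries simply restate the MAST / COUNT(*) rows shown above.

```python
def remaster_delta(before, after):
    """Return, per master node, how many resources it gained (+) or lost (-)."""
    nodes = sorted(set(before) | set(after))
    return {node: after.get(node, 0) - before.get(node, 0) for node in nodes}


# MAST -> COUNT(*) rows from the two query results in this article.
before = {1: 6605, 0: 6473}
after = {1: 13078}

delta = remaster_delta(before, after)
total_before = sum(before.values())
total_after = sum(after.values())

print(delta)
print(total_before, total_after)
```

The totals match (6605 + 6473 = 13078), so every resource that was mastered on node 0 before the lkdebug call shows up under master node 1 afterwards.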

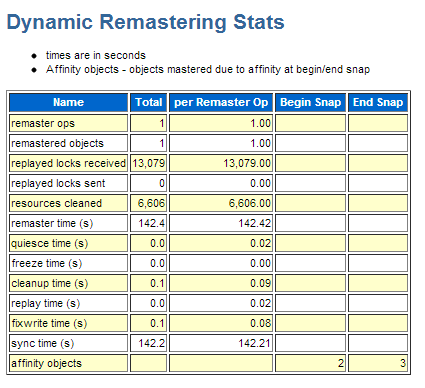

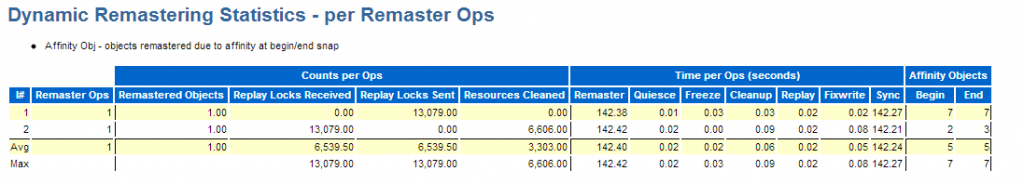

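After remastering a table of about 103 MB with DRM, AWR can be used to see how much bandwidth and other resources the DRM operation consumed.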

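The interconnect_device_statistics section of the RAC-wide AWR report (AWRGRPT) shows the correct DRM network traffic, whereas the Interconnect Device Statistics section of the ordinary AWRRPT report does not seem to reflect the network traffic accurately.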
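The 103 MB figure quoted for LARGE_DRMS is consistent with the 13233 blocks reported by dba_tables. The following Python sketch does the arithmetic, assuming an 8 KB database block size; the article does not state the block size, so that value is an assumption.

```python
# Assumption: 8 KB db_block_size (a common default; not stated in the article).
BLOCK_SIZE_BYTES = 8192


def table_size_mib(blocks, block_size=BLOCK_SIZE_BYTES):
    """Convert a block count into a size in MiB."""
    return blocks * block_size / (1024 * 1024)


size = table_size_mib(13233)  # BLOCKS value from dba_tables for LARGE_DRMS
print(f"{size:.1f} MiB")
```

With a different block size the result scales proportionally, so the check only holds if the tablespace really uses 8 KB blocks.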