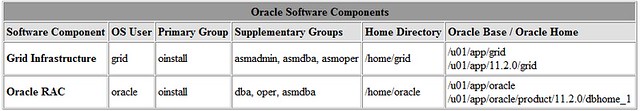

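In "[Video Tutorial] Maclean Teaches You to Install Oracle 11gR2 RAC on Linux 6.3 with Vbox" we covered the steps for installing the 11.2.0.3.5 patch (14727347) in an 11.2.0.3 Grid Infrastructure (GI) environment. Because the opatch auto installation of this 11.2.0.3.5 patch runs into problems, we install the patch manually with opatch apply. The steps we verified are as follows: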

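Once unzipped, 14727347 contains two patch directories, 14727310 and 15876003. It already includes the 11.2.0.3.5 DB PSU, so after downloading this GI PSU there is no need to download the DB PSU separately.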
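Before going any further, it can be worth confirming that the archive unpacked the way the later commands expect. The following is a minimal sketch, assuming the PSU was unzipped under /tmp/patch (the path used in the steps below); the function name `check_patch_dirs` is made up for illustration and is not part of OPatch.

```shell
#!/bin/sh
# Hypothetical helper: confirm both sub-patch directories exist under a base dir.
# The default base /tmp/patch is an assumption taken from the later steps.
check_patch_dirs() {
    base="${1:-/tmp/patch}"
    missing=0
    for id in 14727310 15876003; do
        if [ -d "$base/$id" ]; then
            echo "found   $base/$id"
        else
            echo "missing $base/$id"
            missing=1
        fi
    done
    # Non-zero exit status if either directory is absent.
    return "$missing"
}
```

A call such as `check_patch_dirs /tmp/patch || echo "unzip the PSU first"` prints one line per directory and returns non-zero if either one is missing.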

Download location for PSU 14727347

1) Use rootcrs.pl to stop the services on this node. If an RDBMS DB is running, shut down that instance first

su - oracle

$ <ORACLE_HOME>/bin/srvctl stop database -d <db-unique-name>

su - root

$GRID_HOME/crs/install/rootcrs.pl -unlock

2) Patch the GI HOME

On AIX: su - root; slibclean

su - grid

opatch napply -oh $GRID_HOME -local /tmp/patch/14727310

opatch napply -oh $GRID_HOME -local /tmp/patch/15876003/

3) Patch the RDBMS DB HOME

su - oracle

[oracle@vmac1 scripts]$ /tmp/patch/15876003/custom/server/15876003/custom/scripts/prepatch.sh -dbhome $ORACLE_HOME

/tmp/patch/15876003/custom/server/15876003/custom/scripts/prepatch.sh completed successfully.

opatch napply -oh $ORACLE_HOME -local /tmp/patch/15876003/custom/server/15876003

opatch napply -oh $ORACLE_HOME -local /tmp/patch/14727310

/tmp/patch/15876003/custom/server/15876003/custom/scripts/postpatch.sh -dbhome $ORACLE_HOME
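At this point both homes should list the two sub-patches in their inventories. The sketch below is one way to check that, assuming the output of `opatch lsinventory` mentions each applied patch number somewhere in its text; the helper name `patches_listed` is hypothetical, and it only does a whole-word search rather than parsing the inventory format.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if every patch ID given as an argument
# appears as a whole word in the inventory text read from stdin.
patches_listed() {
    inventory=$(cat)
    for id in "$@"; do
        printf '%s\n' "$inventory" | grep -qw -- "$id" || {
            echo "patch $id not found in inventory" >&2
            return 1
        }
    done
}
```

It could be used as `opatch lsinventory -oh $GRID_HOME | patches_listed 14727310 15876003` as the grid user, and again with `-oh $ORACLE_HOME` as the oracle user.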

4) Run rootcrs.pl -patch

su - root

[root@vmac1 ~]# /g01/11ggrid/app/11.2.0/grid/rdbms/install/rootadd_rdbms.sh

[root@vmac1 ~]# /g01/11ggrid/app/11.2.0/grid/crs/install/rootcrs.pl -patch

5) Repeat the steps above on node 2

6) For existing DBs, run the data dictionary upgrade

cd $ORACLE_HOME/rdbms/admin

sqlplus /nolog

SQL> CONNECT / AS SYSDBA

SQL> STARTUP

SQL> @catbundle.sql psu apply

SQL> QUIT

7) Run the utlrp script and restart the DB

SQL> @?/rdbms/admin/utlrp

SQL> shutdown immediate;

SQL> startup;
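After the restart, a quick look at the data dictionary can show whether the catbundle run was recorded and whether utlrp left any invalid objects behind. This is only a sketch: the helper name `post_patch_sql` is made up, and it assumes the bundle history is visible in `dba_registry_history` and that you connect locally as SYSDBA.

```shell
#!/bin/sh
# Hypothetical helper: print a short SQL script that lists the bundle/PSU
# history and counts invalid objects. Nothing runs until it is piped to sqlplus.
post_patch_sql() {
    cat <<'EOF'
set linesize 200 pagesize 100
column action_time format a30
column comments format a40
select action_time, action, version, comments
  from dba_registry_history
 order by action_time;
select count(*) as invalid_objects
  from dba_objects
 where status = 'INVALID';
exit
EOF
}
```

Running `post_patch_sql | sqlplus -s "/ as sysdba"` as the oracle user prints the history rows followed by the invalid object count.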

8) Restart the applications