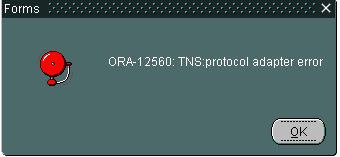

以下这个Action Script是我收集的在解决ORA-125XX(如ORA-12560)这类网络链接故障时的一些思路,主要包括 现有的网络配置(client & server side)、监听日志、SQLNET Client trace等信息– How to troubleshooting ORA-125** connection issues:

# CHECK FIREWALL, WINDOWS FIREWALL , ANTI-Virus Software First !

ping hostname

tnsping TNS

trcroute TNS

telnet <hostname> <port>

tracert hostname

client side

sqlplus scott/tiger@TNS

&

server side

sqlplus scott/tiger@TNS

cat /etc/hosts

cat /etc/resolv.conf

cat /etc/nsswitch.conf

ipconfig -a

ping 127.0.0.1

$ORACLE_HOME/network/admin/sqlnet.ora

$ORACLE_HOME/network/admin/tnsnames.ora

$ORACLE_HOME/network/admin/listener.ora

$ORACLE_HOME/network/admin/endpoints_listener.ora

$ORACLE_HOME/network/log/*

sqlnet.log listener.log

/var/log/messages

/var/adm/messages

errpt -a

ls -ld $ORACLE_HOME

netstat -rn

ps -ef | grep -i tns

lsnrctl status {listener_name}

lsnrvtl services {listener_name}

ulimit -a

1. Complete database alert log.

2. If the database was not restarted from the time of last occurance of the

issue,

select * from v$resource_limit

3. RAM and SWAP configured on the server.

4. ulimit settings for oracle user:

ulimit -aS

ulimit -aH

5. Kernel parameter settings:

/etc/sysctl.conf

dblogin

show parameter cluster_database

show parameter listener

$srvctl config vip -n {nodename}

$lsnrctl status listener

agent.log and the crsd.log ..

crsd agent log and the crsd.log

$crsctl getperm resource ora.LISTENER.lsnr

sql net client trace , Client side tracing is done by adding the following syntax to the client’s sqlnet.ora file:

We will need a timestamped matching set of client/listener sqlnet traces while error is reproduced in order to find the root cause of the issue.

++ Enable client sqlnet tracing.

=======================

To do this add the following to client sqlnet.ora:

TRACE_LEVEL_CLIENT=16

TRACE_UNIQUE_CLIENT=TRUE

TRACE_DIRECTORY_CLIENT=path

TRACE_FILE_CLIENT=client

TRACE_TIMESTAMP_CLIENT=ON

replace path with a local directory for the trace files. (for example c:\temp)

Do a test connection from the problematic client and check if the trace files are created.

Upload the traces containing the error to me on metalink.

++ Enable listener sqlnet tracing.

==========================

To do this edit the listener.ora and add,

TRACE_LEVEL_{listener name}=16

TRACE_TIMESTAMP_{listener name}=TRUE

TRACE_DIRECTORY_{listener name}=/tmp {– this can be any directory other than a top level directory like / or c:\

Replace {listener name} with the name of the listener. For example if your listener was called LISTENER then TRACE_LEVEL_LISTENER=16

You need to restrict the amount of disk space used by the tracing then you must also set,

TRACE_FILELEN_{listener name}=500000 {– size of the files in K

TRACE_FILENO_{listener name}=10 {– number of files

This will limit the traces to 10 files of around 500Mb, so 5000Mb in total. When the 10th file is full it will reuse file number one.

You will need to stop/start the listener for this to take effect.

When the problem reproduces please can you upload the listener trace and the listener log.

Trace_level_client=16

Trace_directory_client={path_to_the_trace_directory} # use the full path to the trace directory

Trace_unique_client=on

Trace_timestamp_client=on

Diag_adr_enabled=off

trace Local listener or SCAN listeners

TRACE_LEVEL_{listener_name}= 16

TRACE_TIMESTAMP_{listener_name}=on

TRACE_DIRECTORY_{listener_name}={path_to_the_trace_directory}

truss -o /tmp/lisener.out -fae lsnrctl start {listener_name}

Some Useful Note:

Note.444705.1 TroubleShooting Guide For ORA-12514 TNS listener could not resolve SERVICE_NAME given in connect descriptor

Note.761740.1 Technicians Unable To Receive Orders While MWM Components Display ODBC Errors And Are Connected

Note.119007.1 ORA-12560: Administering the Listener on UNIX – Troubleshooting

Note 276812.1 TNS-12542 Error When Executing Batch Jobs or in High Transaction Environment

Note.219208.1 Ext/Pub Client Connection via Connect Manager Fails with TNS-12564

Note.393941.1 Ext/Mod ORA-12564 Reported When Using 10g Connection Manager

Note.1116960.1 ORA-609 TNS-12537 and TNS-12547 in 11g Alert.log

Note.550859.1 Abstract TROUBLESHOOTING GUIDE TNS-12518 TNS listener could not hand off client connection.

Note.207303.1 Client / Server / Interoperability Support Between Different Oracle Versions

Note.119706.1 Troubleshooting Guide TNS-12535 or ORA-12535 or ORA-12170 Errors

For database links between different Oracle versions connections must be supported in BOTH directions in the matrix found in Note 207303.1

eg: As 9.2 -} 7.3.4 is not supported then database links between these version

are not supported in either direction.

You are trying to connect two versions (client-server) that are not certified (as confirmed by Note 207303.1) and between which exist many technical incompatibilities.

CLIENT — LISTENER — SERVER RESULT

8 11.1 8 OK

9 11.1 9 OK

10 11.1 10 OK

11 11.1 11 OK

8 11.2 8 FAILS

9 11.2 9 OK

10 11.2 10 OK

11 11.2 11 OK

9 11.1 8 OK

10 11.1 8 OK

11 11.1 8 OK

9 11.2 8 FAILS

10 11.2 8 FAILS

11 11.2 8 FAILS

The relevant relationship that appears to be at issue is LISTENER and DATABASE. Client version is not a factor.

But if the ultimate outcome is that the 11.2 (11gR2) LISTENER is indicated (though I still haven’t seen documentation of this) as not compatible with use on a ORACLE 8i (8.1.7.0) DATABASE, then we’ll capture that here and move on. I would, however, like to see some evidence of this, if it is available. I can find notes in the KB about 10gR2’s listener not supporting 8i database, and I can find notes about 11gR1 having resolved that regression. But I can find nothing regarding listener/database compatibility that mentions 11gR2, that would explain our results.

Clients should be complied with Servers , For Sever 11.2 the only supported clients are 11.2.0 , 11.1.0 , 10.2.0 : 10g end MUST be at 10.2.0.2 (or higher) respectively in order to use PLSQL between those versions. See Note:4511371.8 for more details and finally 10.1.0.5 only with extended support .

On the other Side in order to connect from listener to DB server in a supported way , Listener version should be greater than or equal to the server version .

Note 207303.1 should still be followed.