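From 10g Clusterware to 11g Release 2 Grid Infrastructure, Oracle has packed a great deal into the RAC framework. Even if you install 11.2.0.1 GI carefully, following a Step by Step Installation guide to the letter, running the root.sh script will still throw one error or another. Here is one example: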

[root@rh3 grid]# ./root.sh

Running Oracle 11g root.sh script...

The following environment variables are set as:

ORACLE_OWNER= maclean

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The file "dbhome" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root.sh script.

Now product-specific root actions will be performed.

2011-03-28 20:43:13: Parsing the host name

2011-03-28 20:43:13: Checking for super user privileges

2011-03-28 20:43:13: User has super user privileges

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

LOCAL ADD MODE

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

Adding daemon to inittab

CRS-4123: Oracle High Availability Services has been started.

ohasd is starting

ADVM/ACFS is not supported on oraclelinux-release-5-5.0.2

On one node, root.sh actually reported that ADVM/ACFS is not supported on OEL 5.5. In reality, Red Hat 5 and OEL 5 are among the few platforms that currently support ACFS ("The ACFS install would be on a supported Linux release – either Oracle Enterprise Linux 5 or Red Hat 5").

A Metalink search shows that this is a Linux-platform bug, Bug 9474252: 'ACFSLOAD START' RETURNS "ADVM/ACFS IS NOT SUPPORTED ON DHL-RELEASE-…".

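This "Not Supported" error did not appear when root.sh ran on the other node, which runs the same OS (Enterprise Linux Enterprise Linux Server release 5.5 (Carthage)). So finding the differences between the two nodes should usually be enough to solve the problem: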

Release-related rpm packages on the healthy node:

[maclean@rh6 tmp]$ cat /etc/issue

Enterprise Linux Enterprise Linux Server release 5.5 (Carthage)

Kernel \r on an \m

[maclean@rh6 tmp]$ rpm -qa|grep release

enterprise-release-notes-5Server-17

enterprise-release-5-0.0.22

Release-related rpm packages on the failing node:

[root@rh3 tmp]# rpm -qa | grep release

oraclelinux-release-5-5.0.2

enterprise-release-5-0.0.22

enterprise-release-notes-5Server-17
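With only two or three packages per node, the comparison can be done by eye; with longer lists, a small shell function helps. This is a minimal sketch and not part of the original procedure: the function name `release_pkg_diff` and the file paths are hypothetical, and it assumes you have saved each node's `rpm -qa | grep release` output to a file and copied both files to one host.

```shell
# Hypothetical helper (not an Oracle tool): print the release packages that
# are installed on the failing node but not on the healthy node.
#   $1 = file with `rpm -qa | grep release` output from the healthy node
#   $2 = file with the same output from the failing node
release_pkg_diff() {
    local good bad
    good=$(mktemp) || return 1
    bad=$(mktemp) || return 1
    # comm requires both inputs sorted with the same collation order
    sort -u "$1" > "$good"
    sort -u "$2" > "$bad"
    # -1 hides lines only in the healthy list, -3 hides lines common to both,
    # leaving the lines that appear only in the failing node's list
    comm -13 "$good" "$bad"
    rm -f "$good" "$bad"
}

# Example (hypothetical paths):
#   on rh6: rpm -qa | grep release > /tmp/rh6_release.txt
#   on rh3: rpm -qa | grep release > /tmp/rh3_release.txt
#   release_pkg_diff /tmp/rh6_release.txt /tmp/rh3_release.txt
```

For the two package lists shown above, this would print only `oraclelinux-release-5-5.0.2`.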

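Compared with the healthy node, the failing node has one extra rpm package installed: oraclelinux-release-5-5.0.2. Note that the problem can also be solved by changing the content of the /tmp/.linux_release file to enterprise-release-5-0.0.17, with no need to remove the oraclelinux-release-5* rpm package as we do here. We try removing that rpm to see whether it fixes the problem: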

[root@rh3 install]# rpm -e oraclelinux-release-5-5.0.2

[root@rh3 grid]# ./root.sh

Running Oracle 11g root.sh script...

The following environment variables are set as:

ORACLE_OWNER= maclean

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The file "dbhome" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root.sh script.

Now product-specific root actions will be performed.

2011-03-28 20:57:21: Parsing the host name

2011-03-28 20:57:21: Checking for super user privileges

2011-03-28 20:57:21: User has super user privileges

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

CRS is already configured on this node for crshome=0

Cannot configure two CRS instances on the same cluster.

Please deconfigure before proceeding with the configuration of new home.

Running root.sh again on the failed node, we are told to deconfigure first before configuring again. The official documentation, <Oracle Grid Infrastructure Installation Guide 11g Release 2>, describes how to deconfigure 11g Release 2 Grid Infrastructure (Deconfiguring Oracle Clusterware Without Removing Binaries):

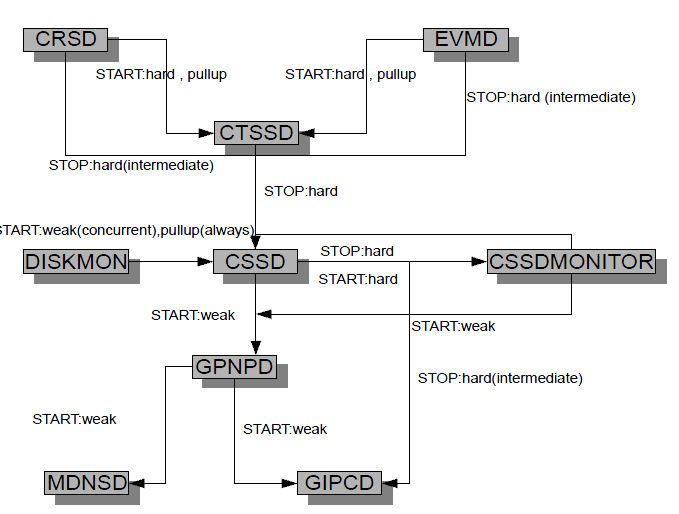

/* Managing Grid Infrastructure still requires the root user for the following steps */

[root@rh3 grid]# pwd

/u01/app/11.2.0/grid

/* We are currently in the GRID_HOME directory */

[root@rh3 grid]# cd crs/install

/* Run the rootcrs.pl script with the -deconfig option */

[root@rh3 install]# ./rootcrs.pl -deconfig

2011-03-28 21:03:05: Parsing the host name

2011-03-28 21:03:05: Checking for super user privileges

2011-03-28 21:03:05: User has super user privileges

Using configuration parameter file: ./crsconfig_params

VIP exists.:rh3

VIP exists.: //192.168.1.105/255.255.255.0/eth0

VIP exists.:rh6

VIP exists.: //192.168.1.103/255.255.255.0/eth0

GSD exists.

ONS daemon exists. Local port 6100, remote port 6200

eONS daemon exists. Multicast port 20796, multicast IP address 234.227.83.81, listening port 2016

Please confirm that you intend to remove the VIPs rh3 (y/[n]) y

ACFS-9200: Supported

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rh3'

CRS-2673: Attempting to stop 'ora.crsd' on 'rh3'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'rh3'

CRS-2673: Attempting to stop 'ora.LISTENER.lsnr' on 'rh3'

CRS-2677: Stop of 'ora.LISTENER.lsnr' on 'rh3' succeeded

CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'rh3' has completed

CRS-2677: Stop of 'ora.crsd' on 'rh3' succeeded

CRS-2673: Attempting to stop 'ora.mdnsd' on 'rh3'

CRS-2673: Attempting to stop 'ora.gpnpd' on 'rh3'

CRS-2673: Attempting to stop 'ora.cssdmonitor' on 'rh3'

CRS-2673: Attempting to stop 'ora.ctssd' on 'rh3'

CRS-2673: Attempting to stop 'ora.evmd' on 'rh3'

CRS-2677: Stop of 'ora.cssdmonitor' on 'rh3' succeeded

CRS-2677: Stop of 'ora.mdnsd' on 'rh3' succeeded

CRS-2677: Stop of 'ora.gpnpd' on 'rh3' succeeded

CRS-2677: Stop of 'ora.evmd' on 'rh3' succeeded

CRS-2677: Stop of 'ora.ctssd' on 'rh3' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'rh3'

CRS-2677: Stop of 'ora.cssd' on 'rh3' succeeded

CRS-2673: Attempting to stop 'ora.diskmon' on 'rh3'

CRS-2673: Attempting to stop 'ora.gipcd' on 'rh3'

CRS-2677: Stop of 'ora.gipcd' on 'rh3' succeeded

CRS-2677: Stop of 'ora.diskmon' on 'rh3' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rh3' has completed

CRS-4133: Oracle High Availability Services has been stopped.

Successfully deconfigured Oracle clusterware stack on this node

/* If the -deconfig run above fails to deconfigure the node, run rootcrs.pl with the -force option */

[root@rh3 install]# ./rootcrs.pl -deconfig -force

2011-03-28 21:41:00: Parsing the host name

2011-03-28 21:41:00: Checking for super user privileges

2011-03-28 21:41:00: User has super user privileges

Using configuration parameter file: ./crsconfig_params

VIP exists.:rh3

VIP exists.: //192.168.1.105/255.255.255.0/eth0

VIP exists.:rh6

VIP exists.: //192.168.1.103/255.255.255.0/eth0

GSD exists.

ONS daemon exists. Local port 6100, remote port 6200

eONS daemon exists. Multicast port 20796, multicast IP address 234.227.83.81, listening port 2016

ACFS-9200: Supported

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rh3'

CRS-2673: Attempting to stop 'ora.crsd' on 'rh3'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'rh3'

CRS-2673: Attempting to stop 'ora.LISTENER.lsnr' on 'rh3'

CRS-2677: Stop of 'ora.LISTENER.lsnr' on 'rh3' succeeded

CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'rh3' has completed

CRS-2677: Stop of 'ora.crsd' on 'rh3' succeeded

CRS-2673: Attempting to stop 'ora.mdnsd' on 'rh3'

CRS-2673: Attempting to stop 'ora.gpnpd' on 'rh3'

CRS-2673: Attempting to stop 'ora.cssdmonitor' on 'rh3'

CRS-2673: Attempting to stop 'ora.ctssd' on 'rh3'

CRS-2673: Attempting to stop 'ora.evmd' on 'rh3'

CRS-2677: Stop of 'ora.cssdmonitor' on 'rh3' succeeded

CRS-2677: Stop of 'ora.mdnsd' on 'rh3' succeeded

CRS-2677: Stop of 'ora.gpnpd' on 'rh3' succeeded

CRS-2677: Stop of 'ora.evmd' on 'rh3' succeeded

CRS-2677: Stop of 'ora.ctssd' on 'rh3' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'rh3'

CRS-2677: Stop of 'ora.cssd' on 'rh3' succeeded

CRS-2673: Attempting to stop 'ora.diskmon' on 'rh3'

CRS-2673: Attempting to stop 'ora.gipcd' on 'rh3'

CRS-2677: Stop of 'ora.gipcd' on 'rh3' succeeded

CRS-2677: Stop of 'ora.diskmon' on 'rh3' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rh3' has completed

CRS-4133: Oracle High Availability Services has been stopped.

Successfully deconfigured Oracle clusterware stack on this node

/* Fortunately this trick has always worked; otherwise, would we have to completely uninstall and reinstall GI every time? */
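The two-step approach in the transcript (a plain `-deconfig`, then `-deconfig -force` if that fails) can be wrapped in one function. This is a hedged sketch, not an Oracle-supplied script: `deconfig_crs` is a hypothetical name, the default GRID_HOME is the path used in this article, and it assumes `rootcrs.pl` exits with a non-zero status when deconfiguration fails, which I have not verified for 11.2.0.1. It must be run as root, and the plain `-deconfig` run may still prompt for VIP removal as shown above.

```shell
# Hypothetical wrapper: deconfigure Clusterware on this node without removing
# the binaries, falling back to -force when the plain deconfig fails.
# Assumption: rootcrs.pl exits non-zero on failure (not verified here).
# The body runs in a subshell, so the cd does not change the caller's cwd.
deconfig_crs() (
    grid_home=${1:-/u01/app/11.2.0/grid}
    # rootcrs.pl lives in $GRID_HOME/crs/install, as in the transcript above
    cd "$grid_home/crs/install" || exit 1
    if ./rootcrs.pl -deconfig; then
        exit 0
    fi
    echo "rootcrs.pl -deconfig failed, retrying with -force" >&2
    # The subshell's exit status is that of the -force run
    ./rootcrs.pl -deconfig -force
)

# Example (as root): deconfig_crs /u01/app/11.2.0/grid && echo "ready to rerun root.sh"
```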

With CRS successfully deconfigured, we can try the ill-fated root.sh once more:

[root@rh3 grid]# pwd

/u01/app/11.2.0/grid

[root@rh3 grid]# ./root.sh

Running Oracle 11g root.sh script...

The following environment variables are set as:

ORACLE_OWNER= maclean

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The file "dbhome" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root.sh script.

Now product-specific root actions will be performed.

2011-03-28 21:07:29: Parsing the host name

2011-03-28 21:07:29: Checking for super user privileges

2011-03-28 21:07:29: User has super user privileges

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

LOCAL ADD MODE

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

Adding daemon to inittab

CRS-4123: Oracle High Availability Services has been started.

ohasd is starting

FATAL: Module oracleoks not found.

FATAL: Module oracleadvm not found.

FATAL: Module oracleacfs not found.

acfsroot: ACFS-9121: Failed to detect /dev/asm/.asm_ctl_spec.

acfsroot: ACFS-9310: ADVM/ACFS installation failed.

acfsroot: ACFS-9311: not all components were detected after the installation.

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node rh6, number 1, and is terminating

An active cluster was found during exclusive startup, restarting to join the cluster

CRS-2672: Attempting to start 'ora.mdnsd' on 'rh3'

CRS-2676: Start of 'ora.mdnsd' on 'rh3' succeeded

CRS-2672: Attempting to start 'ora.gipcd' on 'rh3'

CRS-2676: Start of 'ora.gipcd' on 'rh3' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'rh3'

CRS-2676: Start of 'ora.gpnpd' on 'rh3' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rh3'

CRS-2676: Start of 'ora.cssdmonitor' on 'rh3' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rh3'

CRS-2672: Attempting to start 'ora.diskmon' on 'rh3'

CRS-2676: Start of 'ora.diskmon' on 'rh3' succeeded

CRS-2676: Start of 'ora.cssd' on 'rh3' succeeded

CRS-2672: Attempting to start 'ora.ctssd' on 'rh3'

CRS-2676: Start of 'ora.ctssd' on 'rh3' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'rh3'

CRS-2676: Start of 'ora.crsd' on 'rh3' succeeded

CRS-2672: Attempting to start 'ora.evmd' on 'rh3'

CRS-2676: Start of 'ora.evmd' on 'rh3' succeeded

/u01/app/11.2.0/grid/bin/srvctl start vip -i rh3 ... failed

Preparing packages for installation...

cvuqdisk-1.0.7-1

Configure Oracle Grid Infrastructure for a Cluster ... failed

Updating inventory properties for clusterware

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 5023 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /s01/oraInventory

'UpdateNodeList' was successful.

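This got past the "ADVM/ACFS is not supported" problem, but a new one appeared: "FATAL: Module oracleoks/oracleadvm/oracleacfs not found", meaning the ACFS/ADVM kernel modules could not be found on Linux. According to Metalink, this matches one of two confirmed bugs in GI 11.2.0.2, bug 10252497 or bug 10266447, yet the GI I installed is 11.2.0.1... Fortunately my current environment uses NFS storage, so problems with storage options such as ADVM/ACFS can be ignored. I plan to test again on 11.2.0.2.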
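Before digging into those bugs, it may help to confirm which of the three modules named in the FATAL messages are actually loaded on the node. A minimal sketch, assuming standard `lsmod` output (a header line, then the module name in the first column); the function name `missing_acfs_modules` is hypothetical.

```shell
# Hypothetical helper: read `lsmod` output on stdin and print each
# ACFS-related module (names taken from the FATAL messages) that is not loaded.
missing_acfs_modules() {
    local loaded m
    # Skip the "Module Size Used by" header and keep only the module names
    loaded=$(awk 'NR > 1 { print $1 }')
    for m in oracleoks oracleadvm oracleacfs; do
        # -x requires a whole-line match, so "oracleoks" won't match a longer name
        if ! printf '%s\n' "$loaded" | grep -qx "$m"; then
            echo "$m"
        fi
    done
}

# Example: prints nothing if all three modules are loaded
#   lsmod | missing_acfs_modules
# The control device that ACFS-9121 reports missing can be checked with:
#   ls -l /dev/asm/.asm_ctl_spec
```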

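It has to be said that installing 11.2.0.1 GI involves so many problems that Oracle Support had to write quite a few troubleshooting documents on it, for example <Troubleshooting 11.2 Grid Infastructure Installation Root.sh Issues [ID 1053970.1]> and <How to Proceed from Failed 11gR2 Grid Infrastructure (CRS) Installation [ID 942166.1]>. I have not tried 11.2.0.2 GI yet; hopefully it is not as bad as its predecessor!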