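11.2.0.2 has been out for more than a year and is considerably more stable than 11.2.0.1. When we deploy new systems for clients we now generally recommend installing 11.2.0.2 directly (out of place) and patching up to the PSU recommended in <Oracle Recommended Patches — Oracle Database>.

For existing systems we recommend upgrading to 11.2.0.2 wherever the downtime window allows; clients can of course also wait for the 11.2.0.3 release.

The upgrade from 11.2.0.1 to 11.2.0.2 differs slightly from upgrades in 10g. For mission-critical databases, a proper upgrade rehearsal and backups are mandatory, because upgrading Oracle database software has always been a complex and risky undertaking that demands caution.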

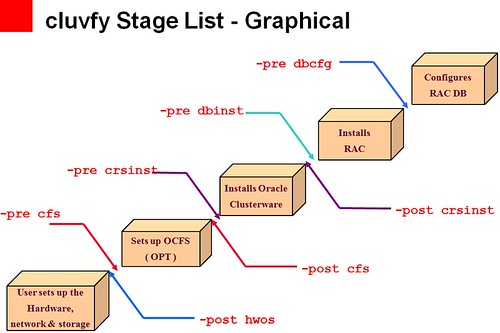

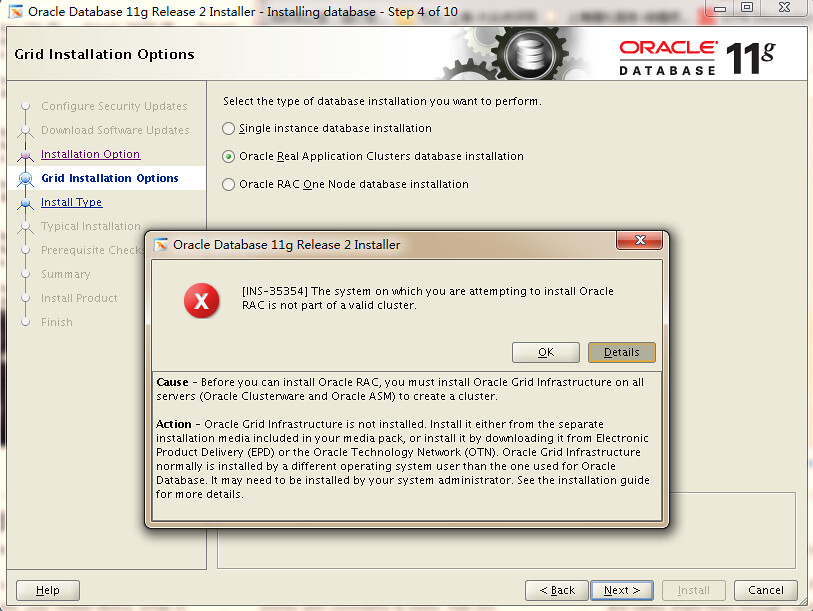

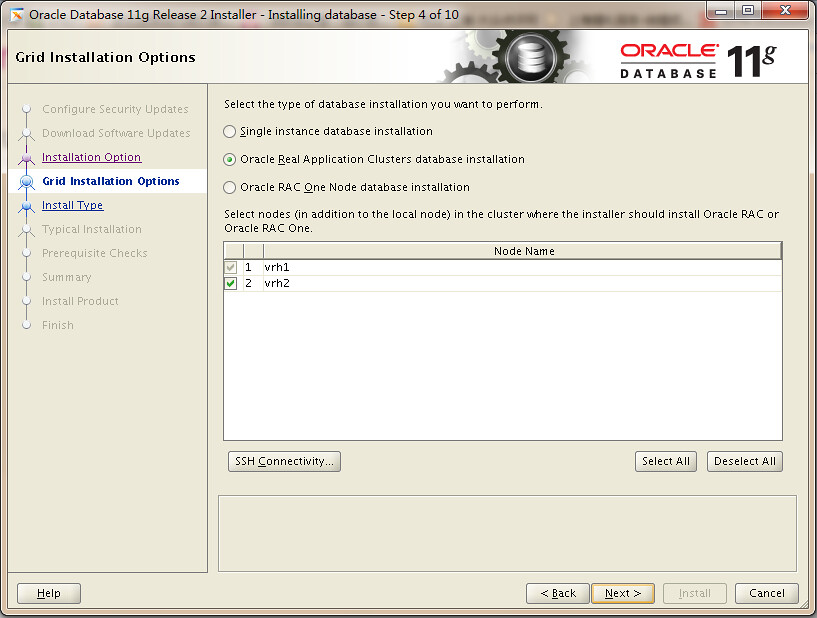

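Upgrading a RAC database is also more complex than upgrading a single-instance database. It can be broken down into the following steps:

1. If you are using Exadata Database Machine hardware, first check whether the Exadata Storage Software and InfiniBand switch versions need to be upgraded; see <Database Machine and Exadata Storage Server 11g Release 2 (11.2) Supported Versions>

2. Complete the preparation for the rolling upgrade of Grid Infrastructure

3. Perform the rolling upgrade of the Grid Infrastructure (GI) software

4. Complete the preparation for upgrading the RDBMS database software

5. Upgrade the RDBMS database software itself, including upgrading the data dictionary and recompiling invalid objects

This article focuses on the preparation and the actual steps for the rolling upgrade of the GI/CRS clusterware. Because 11.2.0.2 is the first patch set for 11gR2 and also the first large out-of-place patch set, most people are not yet familiar with the new upgrade model.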

Preparing for the GI upgrade

1. Note that a rolling upgrade of GI/CRS from 11.2.0.1 to 11.2.0.2 may hit unexpected errors. The details are in <Pre-requsite for 11.2.0.1 to 11.2.0.2 ASM Rolling Upgrade>, quoted here:

Applies to:

Oracle Server - Enterprise Edition - Version: 11.2.0.1 to 11.2.0.2 [Release: 11.2 to 11.2]

Information in this document applies to any platform.

Purpose

This note is to clarify the patch requirement when doing 11.2.0.1 to 11.2.0.2 rolling upgrade.

Scope and Application

Intended audience includes DBA, support engineers.

Pre-requsite for 11.2.0.1 to 11.2.0.2 ASM Rolling Upgrade

There has been some confusion as what patches need to be applied for 11.2.0.1 ASM rolling upgrade to 11.2.0.2 to be successful. Documentation regarding this is not very clear (at the time of writing) and a documentation bug has been filed and documentation will be updated in the future.

There are two bugs related to 11.2.0.1 ASM rolling upgrade to 11.2.0.2:

Unpublished bug 9413827: 11201 TO 11202 ASM ROLLING UPGRADE - OLD CRS STACK FAILS TO STOP

Unpublished bug 9706490: LNX64-11202-UD 11201 -> 11202, DG OFFLINE AFTER RESTART CRS STACK DURING UPGRADE

Some of the symptoms include error message when running rootupgrade.sh:

ORA-15154: cluster rolling upgrade incomplete (from bug: 9413827)

or Diskgroup status is shown offline after the upgrade, crsd.log may have:

2010-05-12 03:45:49.029: [ AGFW][1506556224] Agfw Proxy Server sending the last reply to PE for message:RESOURCE_START[ora.MYDG1.dg rwsdcvm44 1] ID 4098:1526

TextMessage[CRS-2674: Start of 'ora.MYDG1.dg' on 'rwsdcvm44' failed]

TextMessage[ora.MYDG1.dg rwsdcvm44 1]

ora.MYDG1.dg rwsdcvm44 1:

To overcome this issue, there are two actions you need to take:

a). apply proper patch.

b). change crsconfig_lib.pm

Applying Patch:

1). If $GI_HOME is on version 11.2.0.1.2 (i.e GI PSU2 is applied):

Action: You can apply Patch:9706490 for version 11.2.0.1.2. Unpublished bug 9413827 is fixed in 11.2.0.1.2 GI PSU2. Patch:9706490 for version 11.2.0.1.2 is built on top of 11.2.0.1.2 GI PSU2 (i.e. includes the 11.2.0.1.2 GI PSU2, hence includes the fix for 9413827). Applying Patch:9706490 includes both fixes. opatch will recognize 9706490 is superset of 11.2.0.1.2 GI PSU2 (Patch: 9655006) and rollback patch 9655006 before applying Patch: 9706490.

2). If $GI_HOME is on version 11.2.0.1.0 (i.e. no GI PSU applied).

Action: You can apply Patch:9706490 for version 11.2.0.1.2. This would make sure you have applied 11.2.0.1.2 GI PSU2 plus both 9706490 and 9413827 (which is included in GI PSU2). For platforms that do not have 11.2.0.1.2 GI PSU, then you can apply patch 9413827 on 11.2.0.1.0.

3). If $GI_HOME is on version 11.2.0.1.1 (GI PSU1) (this is rare since GI PSU1 was only released for Linux platforms and was quite old).

Action: You can rollback GI PSU1 then apply Patch:9706490 on version 11.2.0.1.2 if your platform has 11.2.0.1.2 GI PSU. If your platform does not have 11.2.0.1.2 GI PSU, then apply patch 9413827.

Modify crsconfig_lib.pm

After patch is applied, modify $11.2.0.2_GI_HOME/crs/install/crsconfig_lib.pm:

Before the change:

# grep for bugs 9655006 or 9413827

@cmdout = grep(/(9655006|9413827)/, @output);

After the change:

# grep for bugs 9655006 or 9413827 or 9706490

@cmdout = grep(/(9655006|9413827|9706490)/, @output);

This would prevent rootupgrade.sh from failing when it validates the pre-requsite patches.
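The decision described in the quoted note can be read as a small lookup from the current GI patch level to the prerequisite patch. The sketch below encodes only what the note states. The function name `choose_prereq_patch` and its yes/no second argument are illustrative assumptions and not part of any Oracle tool.

```shell
# Hypothetical helper: map the current GI patch level to the action in the quoted note.
# $1 = GI version: 11.2.0.1.0 (no PSU), 11.2.0.1.1 (GI PSU1) or 11.2.0.1.2 (GI PSU2)
# $2 = "yes" if an 11.2.0.1.2 GI PSU exists for the platform, otherwise "no"
choose_prereq_patch() {
  version="$1"
  psu2_available="$2"
  case "$version" in
    11.2.0.1.2)
      # PSU2 already contains the 9413827 fix; 9706490 is built on top of it.
      echo "apply 9706490"
      ;;
    11.2.0.1.0)
      if [ "$psu2_available" = "yes" ]; then
        echo "apply 9706490"
      else
        echo "apply 9413827"
      fi
      ;;
    11.2.0.1.1)
      if [ "$psu2_available" = "yes" ]; then
        echo "rollback GI PSU1, then apply 9706490"
      else
        echo "apply 9413827"
      fi
      ;;
    *)
      echo "not covered by the note"
      return 1
      ;;
  esac
}
```

For example, `choose_prereq_patch 11.2.0.1.0 no` prints `apply 9413827`. The function only prints a recommendation; it does not run opatch.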

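Here we assume that the 11.2.0.1 GI in the environment has no PSU applied. To work around the "11201 TO 11202 ASM ROLLING UPGRADE - OLD CRS STACK FAILS TO STOP" bug and complete the rolling GI upgrade, the patch for bug 9413827 must be applied before upgrading to the 11.2.0.2 patch set.

We also recommend using the latest OPatch tool, to avoid the problem of the opatch shipped with 11.2.0.1 failing to recognize the relevant patch.

So, to upgrade GI to 11.2.0.2, we first need to download three patch packages for the target platform from MOS: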

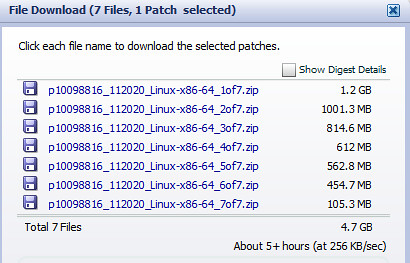

1. 11.2.0.2.0 PATCH SET FOR ORACLE DATABASE SERVER (Patchset) (patch ID 10098816). Note that this 11.2.0.2 patch set actually consists of as many as seven zip files, for example on Linux x86-64:

For the upgrade we only need zip files 1 to 3. The first and second are the out-of-place patch set for the RDBMS database software, and the third is the out-of-place patch set for the Grid Infrastructure/CRS software. This article (GI upgrade only) actually uses only p10098816_112020_Linux-x86-64_3of7.zip.

2. Patch 9413827: 11201 TO 11202 ASM ROLLING UPGRADE - OLD CRS STACK FAILS TO STOP (patch ID 9413827)

3. Patch 6880880: OPatch 11.2 (patch ID 6880880), the latest OPatch tool

2. Install the latest OPatch tool on all nodes. This step does not require stopping any services:
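Before starting, it can help to confirm that the three downloads are actually staged on each node. The following is a minimal sketch using the Linux x86-64 file names that appear in this article. The function name `missing_downloads` and the idea of a single staging directory are assumptions made for illustration.

```shell
# Sketch: print the names of the required zip files that are missing from a staging directory.
# $1 = staging directory (hypothetical; the examples in this article unzip from /tmp)
missing_downloads() {
  dir="$1"
  for f in \
    p10098816_112020_Linux-x86-64_3of7.zip \
    p9413827_112010_Linux-x86-64.zip \
    p6880880_112000_Linux-x86-64.zip
  do
    # Report the file only when it is absent.
    [ -f "$dir/$f" ] || echo "$f"
  done
}
```

Running `missing_downloads /tmp` prints nothing when all three files are present. On other platforms the file names differ, so the list would need to be adjusted.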

Switch to the GI owner, move the existing OPatch directory aside, and install the new OPatch into CRS_HOME:

su - grid

[grid@vrh1 ~]$ mv $CRS_HOME/OPatch $CRS_HOME/OPatch_old

[grid@vrh1 ~]$ unzip /tmp/p6880880_112000_Linux-x86-64.zip -d $CRS_HOME

Confirm the opatch version:

[grid@vrh1 ~]$ $CRS_HOME/OPatch/opatch

Invoking OPatch 11.2.0.1.6

Oracle Interim Patch Installer version 11.2.0.1.6

Copyright (c) 2011, Oracle Corporation. All rights reserved.

3. Install the BUNDLE Patch for Base Bug 9413827 on all nodes in a rolling fashion, one node at a time:

1. Switch to the GI owner and check which patches are already installed

su - grid

opatch lsinventory -detail -oh $CRS_HOME

Invoking OPatch 11.2.0.1.6

Oracle Interim Patch Installer version 11.2.0.1.6

Copyright (c) 2011, Oracle Corporation. All rights reserved.

Oracle Home : /g01/11.2.0/grid

Central Inventory : /g01/oraInventory

from : /etc/oraInst.loc

OPatch version : 11.2.0.1.6

OUI version : 11.2.0.1.0

Log file location : /g01/11.2.0/grid/cfgtoollogs/opatch/opatch2011-09-04_19-08-33PM.log

Lsinventory Output file location :

/g01/11.2.0/grid/cfgtoollogs/opatch/lsinv/lsinventory2011-09-04_19-08-33PM.txt

--------------------------------------------------------------------------------

Installed Top-level Products (1):

Oracle Grid Infrastructure 11.2.0.1.0

There are 1 products installed in this Oracle Home.

........................

###########################################################################

2. Unzip the previously downloaded patch package p9413827_112010_$platform.zip

unzip p9413827_112010_Linux-x86-64.zip

###########################################################################

3. Switch to the DB home owner and stop the resources of the RDBMS DB home on the local node:

su - oracle

Syntax:

% [RDBMS_HOME]/bin/srvctl stop home -o [RDBMS_HOME] -s [status file location] -n [node_name]

srvctl stop home -o $ORACLE_HOME -n vrh1 -s stop_db_res

cat stop_db_res

db-vprod

hostname

www.askmac.cn

###########################################################################

4. Switch to root and run rootcrs.pl -unlock

[root@vrh1 ~]# $CRS_HOME/crs/install/rootcrs.pl -unlock

2011-09-04 20:46:53: Parsing the host name

2011-09-04 20:46:53: Checking for super user privileges

2011-09-04 20:46:53: User has super user privileges

Using configuration parameter file: /g01/11.2.0/grid/crs/install/crsconfig_params

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'vrh1'

CRS-2673: Attempting to stop 'ora.crsd' on 'vrh1'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'vrh1'

CRS-2673: Attempting to stop 'ora.LISTENER.lsnr' on 'vrh1'

CRS-2673: Attempting to stop 'ora.SYSTEMDG.dg' on 'vrh1'

CRS-2673: Attempting to stop 'ora.registry.acfs' on 'vrh1'

CRS-2673: Attempting to stop 'ora.DATA.dg' on 'vrh1'

CRS-2673: Attempting to stop 'ora.FRA.dg' on 'vrh1'

CRS-2677: Stop of 'ora.LISTENER.lsnr' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.vrh1.vip' on 'vrh1'

CRS-2677: Stop of 'ora.vrh1.vip' on 'vrh1' succeeded

CRS-2672: Attempting to start 'ora.vrh1.vip' on 'vrh2'

CRS-2677: Stop of 'ora.registry.acfs' on 'vrh1' succeeded

CRS-2676: Start of 'ora.vrh1.vip' on 'vrh2' succeeded

CRS-2677: Stop of 'ora.SYSTEMDG.dg' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.FRA.dg' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.DATA.dg' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'vrh1'

CRS-2677: Stop of 'ora.asm' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.ons' on 'vrh1'

CRS-2673: Attempting to stop 'ora.eons' on 'vrh1'

CRS-2677: Stop of 'ora.ons' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.net1.network' on 'vrh1'

CRS-2677: Stop of 'ora.net1.network' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.eons' on 'vrh1' succeeded

CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'vrh1' has completed

CRS-2677: Stop of 'ora.crsd' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.gpnpd' on 'vrh1'

CRS-2673: Attempting to stop 'ora.cssdmonitor' on 'vrh1'

CRS-2673: Attempting to stop 'ora.ctssd' on 'vrh1'

CRS-2673: Attempting to stop 'ora.evmd' on 'vrh1'

CRS-2673: Attempting to stop 'ora.asm' on 'vrh1'

CRS-2673: Attempting to stop 'ora.mdnsd' on 'vrh1'

CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'vrh1'

CRS-2677: Stop of 'ora.cssdmonitor' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.gpnpd' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.evmd' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.mdnsd' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.ctssd' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.drivers.acfs' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.asm' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'vrh1'

CRS-2677: Stop of 'ora.cssd' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.diskmon' on 'vrh1'

CRS-2673: Attempting to stop 'ora.gipcd' on 'vrh1'

CRS-2677: Stop of 'ora.gipcd' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.diskmon' on 'vrh1' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'vrh1' has completed

CRS-4133: Oracle High Availability Services has been stopped.

Successfully unlock /g01/11.2.0/grid

###########################################################################

5. As the RDBMS home owner, run the prepatch.sh script from the patch directory

su - oracle

% custom/server/9413827/custom/scripts/prepatch.sh -dbhome [RDBMS_HOME]

[oracle@vrh1 tmp]$ 9413827/custom/server/9413827/custom/scripts/prepatch.sh -dbhome $ORACLE_HOME

9413827/custom/server/9413827/custom/scripts/prepatch.sh completed successfully.

###########################################################################

6. Apply the patch

Run the following as the GI/CRS owner

% opatch napply -local -oh [CRS_HOME] -id 9413827

su - grid

cd /tmp/9413827/

opatch napply -local -oh $CRS_HOME -id 9413827

Invoking OPatch 11.2.0.1.6

Oracle Interim Patch Installer version 11.2.0.1.6

Copyright (c) 2011, Oracle Corporation. All rights reserved.

UTIL session

Oracle Home : /g01/11.2.0/grid

Central Inventory : /g01/oraInventory

from : /etc/oraInst.loc

OPatch version : 11.2.0.1.6

OUI version : 11.2.0.1.0

Log file location : /g01/11.2.0/grid/cfgtoollogs/opatch/opatch2011-09-04_20-52-37PM.log

Verifying environment and performing prerequisite checks...

OPatch continues with these patches: 9413827

Do you want to proceed? [y|n]

y

User Responded with: Y

All checks passed.

Provide your email address to be informed of security issues, install and

initiate Oracle Configuration Manager. Easier for you if you use your My

Oracle Support Email address/User Name.

Visit http://www.oracle.com/support/policies.html for details.

Email address/User Name:

You have not provided an email address for notification of security issues.

Do you wish to remain uninformed of security issues ([Y]es, [N]o) [N]: y

Please shutdown Oracle instances running out of this ORACLE_HOME on the local system.

(Oracle Home = '/g01/11.2.0/grid')

Is the local system ready for patching? [y|n]

y

User Responded with: Y

Backing up files...

Applying interim patch '9413827' to OH '/g01/11.2.0/grid'

Patching component oracle.crs, 11.2.0.1.0...

Patches 9413827 successfully applied.

Log file location: /g01/11.2.0/grid/cfgtoollogs/opatch/opatch2011-09-04_20-52-37PM.log

OPatch succeeded.

Run the following as the DB/RDBMS owner

su - oracle

cd /tmp/9413827/

% opatch napply custom/server/ -local -oh [RDBMS_HOME] -id 9413827

opatch napply custom/server/ -local -oh $ORACLE_HOME -id 9413827

Verifying the update...

Inventory check OK: Patch ID 9413827 is registered in Oracle Home inventory with proper meta-data.

Files check OK: Files from Patch ID 9413827 are present in Oracle Home.

Running make for target install

Running make for target install

The local system has been patched and can be restarted.

UtilSession: N-Apply done.

OPatch succeeded.

###########################################################################

7. Configure the home directories

Run the following as root

chmod +w $CRS_HOME/log/[nodename]/agent

chmod +w $CRS_HOME/log/[nodename]/agent/crsd

Run the following as the DB/RDBMS owner

su - oracle

cd /tmp/9413827/

% custom/server/9413827/custom/scripts/postpatch.sh -dbhome [RDBMS_HOME]

[oracle@vrh1 9413827]$ custom/server/9413827/custom/scripts/postpatch.sh -dbhome $ORACLE_HOME

Reading /s01/orabase/product/11.2.0/dbhome_1/install/params.ora..

Reading /s01/orabase/product/11.2.0/dbhome_1/install/params.ora..

Parsing file /s01/orabase/product/11.2.0/dbhome_1/bin/racgwrap

Parsing file /s01/orabase/product/11.2.0/dbhome_1/bin/srvctl

Parsing file /s01/orabase/product/11.2.0/dbhome_1/bin/srvconfig

Parsing file /s01/orabase/product/11.2.0/dbhome_1/bin/cluvfy

Verifying file /s01/orabase/product/11.2.0/dbhome_1/bin/racgwrap

Verifying file /s01/orabase/product/11.2.0/dbhome_1/bin/srvctl

Verifying file /s01/orabase/product/11.2.0/dbhome_1/bin/srvconfig

Verifying file /s01/orabase/product/11.2.0/dbhome_1/bin/cluvfy

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/bin/racgwrap

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/bin/srvctl

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/bin/srvconfig

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/bin/cluvfy

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/bin/racgmain

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/bin/racgeut

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/bin/diskmon.bin

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/bin/lsnodes

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/bin/osdbagrp

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/bin/rawutl

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/srvm/admin/ractrans

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/srvm/admin/getcrshome

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/bin/gnsd

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/bin/crsdiag.pl

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/lib/libhasgen11.so

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/lib/libclsra11.so

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/lib/libdbcfg11.so

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/lib/libocr11.so

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/lib/libocrb11.so

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/lib/libocrutl11.so

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/lib/libuini11.so

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/lib/librdjni11.so

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/lib/libgns11.so

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/lib/libgnsjni11.so

Reapplying file permissions on /s01/orabase/product/11.2.0/dbhome_1/lib/libagfw11.so

###########################################################################

8. Restart the CRS stack as root

# $CRS_HOME/crs/install/rootcrs.pl -patch

2011-09-04 21:03:32: Parsing the host name

2011-09-04 21:03:32: Checking for super user privileges

2011-09-04 21:03:32: User has super user privileges

Using configuration parameter file: /g01/11.2.0/grid/crs/install/crsconfig_params

CRS-4123: Oracle High Availability Services has been started.

# $ORACLE_HOME/bin/srvctl start home -o $ORACLE_HOME -s $STATUS_FILE -n nodename

###########################################################################

9. Use opatch to confirm that the patch was installed successfully

opatch lsinventory -detail -oh $CRS_HOME

opatch lsinventory -detail -oh $RDBMS_HOME

###########################################################################

10. Repeat the steps above on the other nodes until the patch has been installed on every node

###########################################################################
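Because the same sequence has to be repeated on every node, and each command must run as a specific user, a printed checklist per node can reduce mistakes. The dry-run sketch below only prints the commands from steps 3 to 8 and executes nothing. The function name `print_patch_plan` is hypothetical, and the `/tmp/9413827` staging path and `stop_db_res` status file name are taken from the examples above.

```shell
# Dry-run sketch: print (do not execute) the per-node patching sequence from steps 3 to 8.
# $1 = node name, e.g. vrh1
print_patch_plan() {
  node="$1"
  # Unquoted heredoc: \$ keeps the variable literal in the output, $node is expanded.
  cat <<EOF
[oracle] srvctl stop home -o \$ORACLE_HOME -s stop_db_res -n $node
[root]   \$CRS_HOME/crs/install/rootcrs.pl -unlock
[oracle] cd /tmp/9413827 && custom/server/9413827/custom/scripts/prepatch.sh -dbhome \$ORACLE_HOME
[grid]   cd /tmp/9413827 && opatch napply -local -oh \$CRS_HOME -id 9413827
[oracle] cd /tmp/9413827 && opatch napply custom/server/ -local -oh \$ORACLE_HOME -id 9413827
[root]   chmod +w \$CRS_HOME/log/$node/agent
[root]   chmod +w \$CRS_HOME/log/$node/agent/crsd
[oracle] cd /tmp/9413827 && custom/server/9413827/custom/scripts/postpatch.sh -dbhome \$ORACLE_HOME
[root]   \$CRS_HOME/crs/install/rootcrs.pl -patch
[oracle] srvctl start home -o \$ORACLE_HOME -s stop_db_res -n $node
EOF
}
```

A loop such as `for n in vrh1 vrh2; do print_patch_plan "$n"; done` prints one checklist per node. Finishing and verifying one node (step 9) before moving on to the next keeps the patching rolling.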

Note that there are additional considerations on AIX:

# Special Instruction for AIX

# ---------------------------

#

# During the application of this patch should you see any errors with regards

# to files being locked or opatch being unable to copy files then this

#

# could be as result of a process which requires termination or an additional

#

# file needing to be unloaded from the system cache.

#

#

# To try and identify the likely cause please execute the following commands

#

# and provide the output to your support representative, who will be able to

#

# identify the corrective steps.

#

#

# genld -l | grep [CRS_HOME]

#

# genkld | grep [CRS_HOME] ( full or partial path will do )

#

#

# Simple Case Resolution:

#

# If genld returns data then a currently executing process has something open in

# the [CRS_HOME] directory, please terminate the process as

# required/recommended.

#

#

# If genkld returns data then please remove the entries from the

# OS system cache by using the slibclean command as root;

#

#

# slibclean

#

###########################################################################

#

# Patch Deinstallation Instructions:

# ----------------------------------

#

# To roll back the patch, follow all of the above steps 1-5. In step 6,

# invoke the following opatch commands to roll back the patch in all homes.

#

# % opatch rollback -id 9413827 -local -oh [CRS_HOME]

#

# % opatch rollback -id 9413827 -local -oh [RDBMS_HOME]

#

# Afterwards, continue with steps 7-9 to complete the procedure.

#

###########################################################################

#

# If you have any problems installing this PSE or are not sure

# about inventory setup please call Oracle support.

#

###########################################################################

Upgrading GI to 11.2.0.2

1. Unzip the software package. As noted above, the third zip file contains the grid software

unzip p10098816_112020_Linux-x86-64_3of7.zip

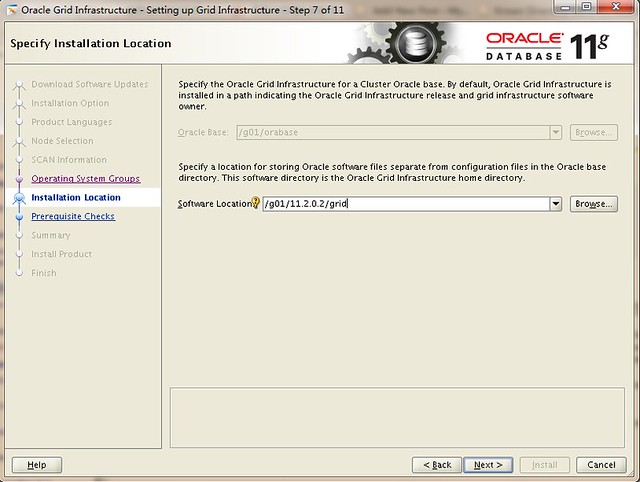

2. As the GI owner, launch the GI/CRS OUI installer and choose an out-of-place installation directory

(grid)$ unset ORACLE_HOME ORACLE_BASE ORACLE_SID

(grid)$ export DISPLAY=:0

(grid)$ cd /u01/app/oracle/patchdepot/grid

(grid)$ ./runInstaller

Starting Oracle Universal Installer…

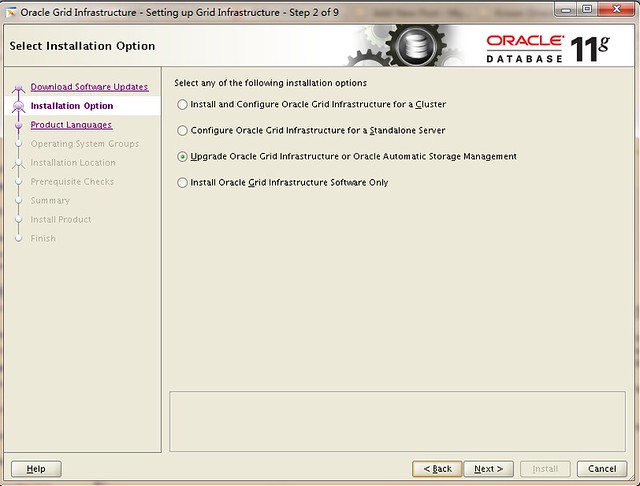

On the "Select Installation Options" screen, choose Upgrade Oracle Grid Infrastructure or Oracle Automatic Storage Management

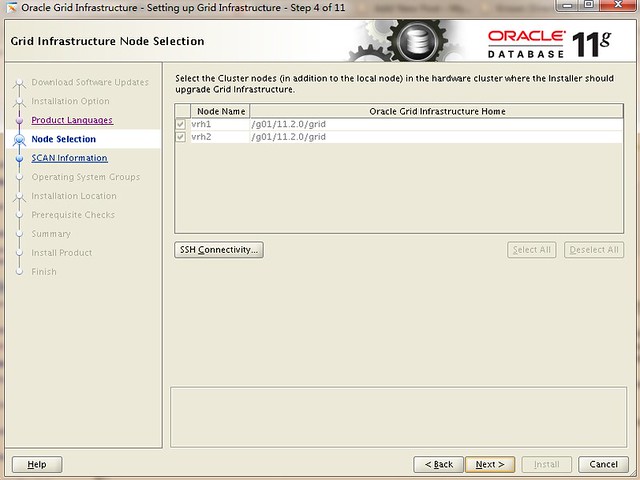

Choose a directory different from the existing GI software home

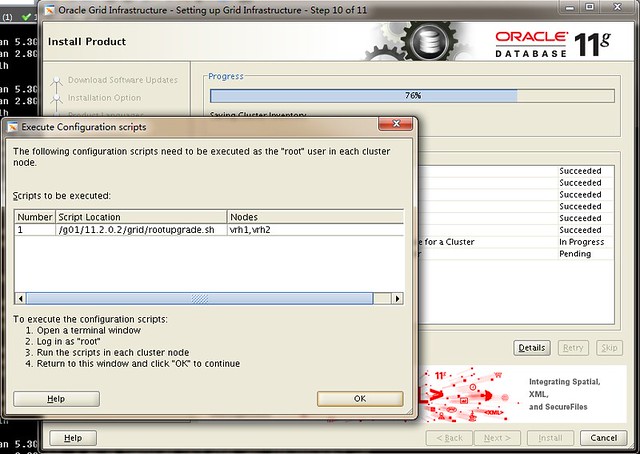

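When the installation completes, you will be prompted to run rootupgrade.sh as root

3. Note that until rootupgrade.sh is actually run, database services remain available on all nodes. While the script runs, CRS on the local node is shut down briefly, so during the rolling upgrade the node currently being upgraded is unavailable

Because of unpublished bug 10011084 and unpublished bug 10128494, the crsconfig_lib.pm file must be modified before running rootupgrade.sh, as follows: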

cp $NEW_CRS_HOME/crs/install/crsconfig_lib.pm $NEW_CRS_HOME/crs/install/crsconfig_lib.pm.bak

vi $NEW_CRS_HOME/crs/install/crsconfig_lib.pm

Change the following lines in that file, then confirm the result with diff:

From

@cmdout = grep(/$bugid/, @output);

To

@cmdout = grep(/(9655006|9413827)/, @output);

From

my @exp_func = qw(check_CRSConfig validate_olrconfig validateOCR

To

my @exp_func = qw(check_CRSConfig validate_olrconfig validateOCR read_file

$ diff crsconfig_lib.pm.bak crsconfig_lib.pm

699c699

< my @exp_func = qw(check_CRSConfig validate_olrconfig validateOCR

---

> my @exp_func = qw(check_CRSConfig validate_olrconfig validateOCR read_file

13277c13277

< @cmdout = grep(/$bugid/, @output);

---

> @cmdout = grep(/(9655006|9413827)/, @output);

Then copy the modified file to every other node:

scp /g01/11.2.0.2/grid/crs/install/crsconfig_lib.pm vrh2:/g01/11.2.0.2/grid/crs/install/crsconfig_lib.pm
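The two manual edits can also be scripted, which avoids typing mistakes in a file of more than 13,000 lines. This is a minimal sketch that assumes the two target lines appear exactly as shown above. The function name `patch_crsconfig` is hypothetical, and the result should still be checked with diff before running rootupgrade.sh.

```shell
# Sketch: apply the two crsconfig_lib.pm edits described above with sed.
# $1 = path to crsconfig_lib.pm; the untouched original is kept as "$1.bak".
patch_crsconfig() {
  file="$1"
  # Keep the first backup; never overwrite it on a re-run.
  [ -f "$file.bak" ] || cp "$file" "$file.bak" || return 1
  # 1) replace the $bugid pattern with the explicit bug numbers
  # 2) append read_file to the first line of the @exp_func list
  sed -e 's#grep(/\$bugid/, @output)#grep(/(9655006|9413827)/, @output)#' \
      -e 's#^\(.*my @exp_func *= *qw(check_CRSConfig validate_olrconfig validateOCR\) *$#\1 read_file#' \
      "$file" > "$file.new" || return 1
  # Write back through cat so the original file's owner and mode are preserved.
  cat "$file.new" > "$file" && rm -f "$file.new"
}
```

It would be invoked as `patch_crsconfig "$NEW_CRS_HOME/crs/install/crsconfig_lib.pm"`, followed by a diff against the `.bak` copy. Both substitutions leave an already modified file unchanged, so running the function twice is harmless.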

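If that seems like too much trouble, you can also download a pre-modified crsconfig_lib.pm directly from here.

Because of bug 10056593 and bug 10241443, the following errors may also appear while rootupgrade.sh is running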

Due to bug 10056593, rootupgrade.sh will report this error and continue. This error is ignorable.

Failed to add (property/value):('OLD_OCR_ID/'-1') for checkpoint:ROOTCRS_OLDHOMEINFO.Error code is 256

Due to bug 10241443, rootupgrade.sh may report the following error when installing the cvuqdisk package.

This error is ignorable.

ls: /usr/sbin/smartctl: No such file or directory

/usr/sbin/smartctl not found.

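The errors above can be ignored and do not affect the upgrade.

4. Run the rootupgrade.sh script. We suggest starting with the node that carries the heavier load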

[root@vrh1 grid]# /g01/11.2.0.2/grid/rootupgrade.sh

Running Oracle 11g root script...

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /g01/11.2.0.2/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /g01/11.2.0.2/grid/crs/install/crsconfig_params

Creating trace directory

Failed to add (property/value):('OLD_OCR_ID/'-1') for checkpoint:ROOTCRS_OLDHOMEINFO.Error code is 256

ASM upgrade has started on first node.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'vrh1'

CRS-2673: Attempting to stop 'ora.crsd' on 'vrh1'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'vrh1'

CRS-2673: Attempting to stop 'ora.LISTENER.lsnr' on 'vrh1'

CRS-2673: Attempting to stop 'ora.SYSTEMDG.dg' on 'vrh1'

CRS-2673: Attempting to stop 'ora.registry.acfs' on 'vrh1'

CRS-2677: Stop of 'ora.LISTENER.lsnr' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.vrh1.vip' on 'vrh1'

CRS-2677: Stop of 'ora.vrh1.vip' on 'vrh1' succeeded

CRS-2672: Attempting to start 'ora.vrh1.vip' on 'vrh2'

CRS-2677: Stop of 'ora.registry.acfs' on 'vrh1' succeeded

CRS-2676: Start of 'ora.vrh1.vip' on 'vrh2' succeeded

CRS-2677: Stop of 'ora.SYSTEMDG.dg' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'vrh1'

CRS-2677: Stop of 'ora.asm' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.ons' on 'vrh1'

CRS-2673: Attempting to stop 'ora.eons' on 'vrh1'

CRS-2677: Stop of 'ora.ons' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.net1.network' on 'vrh1'

CRS-2677: Stop of 'ora.net1.network' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.eons' on 'vrh1' succeeded

CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'vrh1' has completed

CRS-2677: Stop of 'ora.crsd' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.mdnsd' on 'vrh1'

CRS-2673: Attempting to stop 'ora.cssdmonitor' on 'vrh1'

CRS-2673: Attempting to stop 'ora.ctssd' on 'vrh1'

CRS-2673: Attempting to stop 'ora.evmd' on 'vrh1'

CRS-2673: Attempting to stop 'ora.asm' on 'vrh1'

CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'vrh1'

CRS-2677: Stop of 'ora.cssdmonitor' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.mdnsd' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.evmd' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.ctssd' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.drivers.acfs' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.asm' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'vrh1'

CRS-2677: Stop of 'ora.cssd' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.gpnpd' on 'vrh1'

CRS-2673: Attempting to stop 'ora.diskmon' on 'vrh1'

CRS-2677: Stop of 'ora.diskmon' on 'vrh1' succeeded

CRS-2677: Stop of 'ora.gpnpd' on 'vrh1' succeeded

CRS-2673: Attempting to stop 'ora.gipcd' on 'vrh1'

CRS-2677: Stop of 'ora.gipcd' on 'vrh1' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'vrh1' has completed

CRS-4133: Oracle High Availability Services has been stopped.

Successfully deleted 1 keys from OCR.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

OLR initialization - successful

Adding daemon to inittab

ACFS-9200: Supported

ACFS-9300: ADVM/ACFS distribution files found.

ACFS-9312: Existing ADVM/ACFS installation detected.

ACFS-9314: Removing previous ADVM/ACFS installation.

ACFS-9315: Previous ADVM/ACFS components successfully removed.

ACFS-9307: Installing requested ADVM/ACFS software.

ACFS-9308: Loading installed ADVM/ACFS drivers.

ACFS-9321: Creating udev for ADVM/ACFS.

ACFS-9323: Creating module dependencies - this may take some time.

ACFS-9327: Verifying ADVM/ACFS devices.

ACFS-9309: ADVM/ACFS installation correctness verified.

clscfg: EXISTING configuration version 5 detected.

clscfg: version 5 is 11g Release 2.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

Preparing packages for installation...

cvuqdisk-1.0.9-1

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

The last node to run rootupgrade.sh shows the following messages, indicating that GI/CRS has been upgraded successfully:

Successfully deleted 1 keys from OCR.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

OLR initialization - successful

Adding daemon to inittab

ACFS-9200: Supported

ACFS-9300: ADVM/ACFS distribution files found.

ACFS-9312: Existing ADVM/ACFS installation detected.

ACFS-9314: Removing previous ADVM/ACFS installation.

ACFS-9315: Previous ADVM/ACFS components successfully removed.

ACFS-9307: Installing requested ADVM/ACFS software.

ACFS-9308: Loading installed ADVM/ACFS drivers.

ACFS-9321: Creating udev for ADVM/ACFS.

ACFS-9323: Creating module dependencies - this may take some time.

ACFS-9327: Verifying ADVM/ACFS devices.

ACFS-9309: ADVM/ACFS installation correctness verified.

clscfg: EXISTING configuration version 5 detected.

clscfg: version 5 is 11g Release 2.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

Started to upgrade the Oracle Clusterware. This operation may take a few minutes.

Started to upgrade the CSS.

Started to upgrade the CRS.

The CRS was successfully upgraded.

Oracle Clusterware operating version was successfully set to 11.2.0.2.0

ASM upgrade has finished on last node.

Preparing packages for installation...

cvuqdisk-1.0.9-1

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

5. Confirm the GI/CRS version

su - grid

$ crsctl query crs activeversion

Oracle Clusterware active version on the cluster is [11.2.0.2.0]

hostname

www.askmac.cn

/g01/11.2.0.2/grid/OPatch/opatch lsinventory -oh /g01/11.2.0.2/grid

Invoking OPatch 11.2.0.1.1

Oracle Interim Patch Installer version 11.2.0.1.1

Copyright (c) 2009, Oracle Corporation. All rights reserved.

Oracle Home : /g01/11.2.0.2/grid

Central Inventory : /g01/oraInventory

from : /etc/oraInst.loc

OPatch version : 11.2.0.1.1

OUI version : 11.2.0.2.0

OUI location : /g01/11.2.0.2/grid/oui

Log file location : /g01/11.2.0.2/grid/cfgtoollogs/opatch/opatch2011-09-05_02-17-19AM.log

Patch history file: /g01/11.2.0.2/grid/cfgtoollogs/opatch/opatch_history.txt

Lsinventory Output file location : /g01/11.2.0.2/grid/cfgtoollogs/opatch/lsinv/lsinventory2011-09-05_02-17-19AM.txt

--------------------------------------------------------------------------------

Installed Top-level Products (1):

Oracle Grid Infrastructure 11.2.0.2.0

There are 1 products installed in this Oracle Home.
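The version check can be scripted by extracting the bracketed value from the `crsctl query crs activeversion` output shown above. This is a small sketch that assumes the message format matches that output exactly. The function names `active_version` and `is_active_version` are hypothetical.

```shell
# Read `crsctl query crs activeversion` output on stdin and print the version inside [ ].
active_version() {
  sed -n 's/.*active version on the cluster is \[\([0-9.]*\)].*/\1/p'
}

# Succeed only if the version read from stdin equals the expected one.
# $1 = expected version, e.g. 11.2.0.2.0
is_active_version() {
  [ "$(active_version)" = "$1" ]
}
```

On a cluster node this could be used as `crsctl query crs activeversion | is_active_version 11.2.0.2.0 && echo upgraded`. Judging by the rootupgrade.sh output above, the operating version is set to 11.2.0.2.0 on the last node, so the check is expected to pass only after every node has been upgraded.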

6. Update bash_profile so that CRS_HOME, ORACLE_HOME, PATH and related variables point to the new GI directory
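As an illustration, the relevant lines in the grid owner's profile might look like the following. This assumes the new home is /g01/11.2.0.2/grid as in the examples above and that the grid owner uses bash. Any existing lines that still reference the old /g01/11.2.0/grid home would need to be replaced rather than kept alongside these.

```shell
# Assumed new GI home, taken from the rootupgrade.sh example in this article.
export CRS_HOME=/g01/11.2.0.2/grid
export ORACLE_HOME=$CRS_HOME
# Put the new home's bin and OPatch directories ahead of anything already on PATH.
export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$PATH
```

After logging in again, `which crsctl` should resolve to the new home if the old entries have been removed.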