A reader once asked me whether, in 10g, duplicating a database with RMAN's duplicate target database command requires a full database backup to be taken first.

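In practice I rarely use duplicate target database in 10g to help build a Data Guard standby database, so although I had some recollection of how it works, I could not answer with full certainty.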

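Today I checked the documentation and found that the choice between Active database duplication and Backup-based duplication only exists from 11g onward, with Active database duplication being the new option. In other words, duplication in 10g requires an RMAN backup of the database to be taken beforehand.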

Both features are described in the document <RMAN 'Duplicate Database' Feature in 11G>, quoted below:

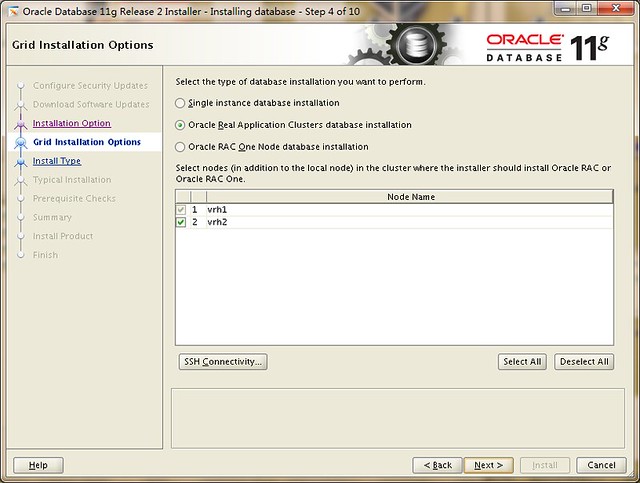

RMAN 'Duplicate Database' Feature in 11G

You can create a duplicate database using the RMAN duplicate command. The duplicate database has a different DBID from the source database and functions entirely independently. Starting from 11g you can do duplicate database in 2 ways.

1. Active database duplication
2. Backup-based duplication

Active database duplication copies the live target database over the network to the auxiliary destination and then creates the duplicate database. Only difference is that you don't need to have the pre-existing RMAN backups and copies.

The duplication work is performed by an auxiliary channel. This channel corresponds to a server session on the auxiliary instance on the auxiliary host.

As part of the duplicating operation, RMAN automates the following steps:

1. Creates a control file for the duplicate database
2. Restarts the auxiliary instance and mounts the duplicate control file
3. Creates the duplicate datafiles and recovers them with incremental backups and archived redo logs
4. Opens the duplicate database with the RESETLOGS option

For the active database duplication, RMAN does one extra step, i.e. copy the target database datafiles over the network to the auxiliary instance.

A RAC TARGET database can be duplicated as well. The procedure is the same as below. If the auxiliary instance needs to be a RAC-database as well, then start the duplicate procedure to a single instance and convert the auxiliary to RAC after the duplicate has succeeded.
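To make the difference between the two modes concrete, here is a minimal Python sketch that assembles the text of an RMAN command file for either mode. The function name `build_duplicate_script` and the duplicate name `dupdb` are hypothetical. The sketch assumes the RMAN session is already connected to both the target and the auxiliary instance, and that the duplicate lives on a different host with the same directory layout, which is why NOFILENAMECHECK is included. Treat it as an illustration under those assumptions, not a complete procedure.

```python
def build_duplicate_script(dup_name: str, active: bool) -> str:
    """Return RMAN command-file text for a DUPLICATE run.

    active=True  -> 11g active duplication (no pre-existing backups needed)
    active=False -> backup-based duplication (the only mode available in 10g)
    """
    # Reject anything that is not a plain database name, so the
    # generated command cannot be altered by odd input.
    if not dup_name.replace("_", "").isalnum():
        raise ValueError(f"unexpected database name: {dup_name!r}")

    lines = [f"DUPLICATE TARGET DATABASE TO {dup_name}"]
    if active:
        # The only syntactic difference between the two modes.
        lines.append("FROM ACTIVE DATABASE")
    # Assumption: same file names on a different host (see lead-in).
    lines.append("NOFILENAMECHECK;")
    return "\n  ".join(lines) + "\n"


if __name__ == "__main__":
    # 'dupdb' is a hypothetical duplicate database name.
    print(build_duplicate_script("dupdb", active=True))
    print(build_duplicate_script("dupdb", active=False))
```

The generated text differs only in the FROM ACTIVE DATABASE clause. Without it, RMAN has to find existing backups and archived logs that the auxiliary channel can read.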

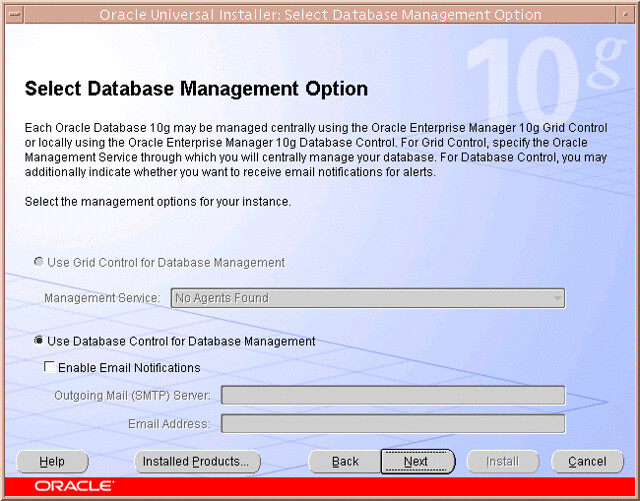

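In 10g, by contrast, you not only have to back up the target database but also have to copy the backup sets (backupset) to the destination host by hand, which is rather tedious: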

Oracle10G RMAN Database Duplication

If you are using a disk backup solution and duplicate to a remote node you must first copy the backupsets from the original host's backup location to the same mount and path on the remote server. Because duplication uses auxiliary channels the files must be where the IO pipe is allocated. So the IO will take place on the remote node and disk backups must be locally available.
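Because the auxiliary channel reads the backup pieces on the remote node, it can be worth confirming that every piece really exists there under the same path before starting the duplication. The Python sketch below does only that. The function name `missing_backup_pieces` is hypothetical, and the list of piece paths is assumed to have been collected from the source host beforehand (for example from the output of RMAN's LIST BACKUP). The script is meant to run on the auxiliary host.

```python
import os
from typing import Iterable, List


def missing_backup_pieces(piece_paths: Iterable[str]) -> List[str]:
    """Return the backup piece paths that are not readable files on this host.

    Run this on the auxiliary (remote) host with the paths exactly as they
    appear on the source host, since the pieces must sit at the same
    mount and path.
    """
    return [
        path
        for path in piece_paths
        if not (os.path.isfile(path) and os.access(path, os.R_OK))
    ]


if __name__ == "__main__":
    # Hypothetical piece paths; replace with the real list from the source host.
    pieces = ["/backup/rman/piece_1.bkp", "/backup/rman/piece_2.bkp"]
    for path in missing_backup_pieces(pieces):
        print(f"missing or unreadable: {path}")
```

An empty result means all listed pieces are present and readable locally. It does not prove that the list itself is complete.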